I recently spent some time with Paul Fleck, President of DataSpeed Inc., at a closed circuit in France to talk through his company’s technology.

One of the technologies developed by his Michigan-based company has echoes from an earlier part of Paul’s career. With a background in electrical engineering as a drive-by-wire specialist, Paul cut his professional teeth in the 1990s Ford-run F1 outfit Benetton, working alongside Michael Schumacher in his heyday.

And while fly-by-wire technology was later banned in F1, its evolution has had a lasting impact and is now regularly found in robotics and cars.

DataSpeed has taken the drive-by-wire approach to another level, providing a multi-point interface to specific donor vehicles. In this case, that is a Ford Mondeo (sold as the Fusion in the US), which can then serve as a base vehicle for developing autonomous vehicle software and algorithms.

Other companies produce similar hardware for other vehicles, of course, and we’ll be looking at those when the opportunity arises, but I’m writing this to put this vehicle into context – this is what you start with before you have an autonomous vehicle: a blank canvas research platform.

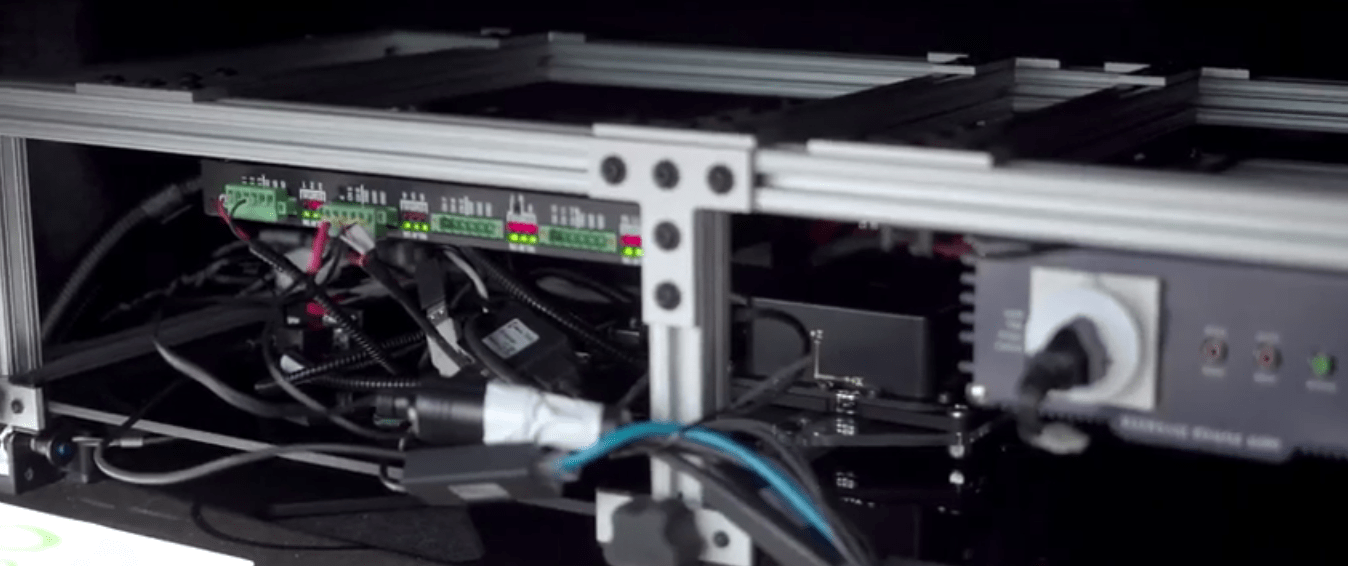

Three obvious bits of equipment sit in the front of the car: a laptop, a small touch-screen unit and – somewhat interestingly – an Xbox controller. There’s quite a bit more tucked out of sight behind the back seat, and some sensors on the roof as well.

“The touch-screen display enables us to turn power on and off for our various electronics. Engineers love it because if something doesn’t work, they can reset it and it works again, magically.”

“The laptop is acting as the computing platform bringing the joystick commands and converting them to a protocol on the CAN network, which in turn interfaces with our drive-by-wire system on the vehicle and that’s how we’re able to control it – using an Xbox controller.”
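To make that joystick-to-CAN step a little more concrete, here is a minimal sketch of how normalised controller readings might be packed into an 8-byte CAN data field. The message ID, the scaling and the byte layout are all invented for illustration; this is not DataSpeed’s actual protocol, and a real system would add things like enable flags, counters and checksums.

```python
import struct

# Hypothetical arbitration ID and payload layout, invented for illustration.
# This is NOT DataSpeed's actual CAN protocol.
HYPOTHETICAL_CMD_ID = 0x123


def clamp(value, low, high):
    """Limit value to the closed range [low, high]."""
    return max(low, min(high, value))


def joystick_to_can_payload(steer_axis, throttle_trigger, brake_trigger):
    """Pack normalised joystick readings into an 8-byte CAN data field.

    steer_axis: -1.0 (full left) to 1.0 (full right)
    throttle_trigger, brake_trigger: 0.0 (released) to 1.0 (fully pressed)
    """
    steer = int(round(clamp(steer_axis, -1.0, 1.0) * 32767))
    throttle = int(round(clamp(throttle_trigger, 0.0, 1.0) * 65535))
    brake = int(round(clamp(brake_trigger, 0.0, 1.0) * 65535))
    # Little-endian: int16 steering, uint16 throttle, uint16 brake, 2 pad bytes.
    return struct.pack("<hHHxx", steer, throttle, brake)


payload = joystick_to_can_payload(0.25, 0.5, 0.0)
print(hex(HYPOTHETICAL_CMD_ID), payload.hex())
```

Clamping the inputs before scaling means a noisy or out-of-range controller reading can never overflow the fixed-width fields.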

The laptop has some of DataSpeed’s own software running on a version of Linux called Ubuntu, along with RViz, part of the ROS system often found in robotics development.

Paul enabled the computer control by hitting two buttons on the vehicle’s steering wheel, and a number of codes flashed across the laptop’s screen. He flicked a few buttons on the Xbox controller, demonstrating brake, throttle and steering control while the vehicle was parked, before we drove off.

“Normally, one person shouldn’t be the safety driver and controller. How we’ve developed the system means that we can easily hand control back to the driver, or the driver can take control back whenever they want.”

Paul pointed out that the Xbox controller isn’t a core feature; it’s there to show off the control systems and the interface with the actuators already in the vehicle.

“We’re marketing to engineers who need a development platform. They’ll put their computer in the back, add a sensing system and some software, and then they can get started.”

The handover problem sounds small and simple, but it’s quite complex. Even Ford recently announced they were scaling back plans for partially autonomous vehicles and instead focusing efforts on fully autonomous vehicles, partly because engineers were relaxing too much to be able to regain control of the vehicle safely when prompted. This reflects findings from various studies run in simulators at the University of Southampton, which highlighted the delay between alerting the driver and successful handover as ‘alarmingly slow’ – but these are human problems, not engineering problems… hence Ford’s interest in removing the human completely. No human, no handover problem.

“In 3 seconds, you can travel 200 feet, at speed,” says Paul. “Remember, if the autonomous computer is handing back control it means that it does not understand what’s going on, and what you’re asking a human to do in that period of time is: 1) switch context from relaxing crossword to driving a car; 2) understand what the threat is, and then; 3) do an evasive manoeuvre… and I’m not sure humans are capable of doing that.”
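Paul’s figure is easy to sanity-check. The short sketch below (the function names are my own) converts between speed, time and distance: covering 200 feet in 3 seconds works out at roughly 45 mph, and at a motorway speed of 70 mph the same 3 seconds covers a little over 300 feet.

```python
FEET_PER_MILE = 5280
SECONDS_PER_HOUR = 3600


def distance_travelled_ft(speed_mph, seconds):
    """Distance covered in feet at a constant speed."""
    feet_per_second = speed_mph * FEET_PER_MILE / SECONDS_PER_HOUR
    return feet_per_second * seconds


def speed_for_distance_mph(distance_ft, seconds):
    """Constant speed in mph needed to cover distance_ft in the given time."""
    return distance_ft / seconds * SECONDS_PER_HOUR / FEET_PER_MILE


print(f"200 ft in 3 s implies {speed_for_distance_mph(200, 3):.1f} mph")
print(f"3 s at 70 mph covers {distance_travelled_ft(70, 3):.0f} ft")
```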

The use of a game controller also suggests that it wouldn’t be a great stretch to pair this system with control methods from computer games, as a route to simulating environments and developing algorithms. Examples include Microsoft’s recent release of sensor simulation software for drones and OpenAI’s release of a driverless car simulator in GTA V.

Here’s a contradiction in terms: How can you be a petrol-head and passionate about autonomous vehicles?

“Well, it’s still going to have an engine… when you’re a petrol head, you’re probably more technical, into new technology and there’s a lot of new technology in autonomous vehicles.”

“I’ll be honest, this car right here is probably more sophisticated than an F1 car right now, it’s hybrid, highly engineered, there’s a lot of complex electro-mechanical systems, a lot of technology just in this vehicle. Unfortunately, I think the UK would be further ahead in ‘by-wire’ today had the FIA not banned that, because all that technology was being developed in England – I was involved with that over 20 years ago.”

At this point, Paul suggested we switch into navigation using GPS, showing a readout from the pair of NovAtel GPS receivers mounted on the roof, indicating latitude and longitude, as well as the standard deviation (i.e. the level of accuracy derived from the signals before further processing or sensor input) of about 30cm.

GPS has weaknesses, ranging from electromagnetic disturbances in the atmosphere to, particularly in the UK, woodland and rain. It also needs a lot of computing power. Ever wondered why your satnav gets hot, or your phone eats battery power so quickly when you’re using it for navigation? It’s all about the maths.

You see, while GPS signals travel at the speed of light, they are coming from a long way away. By the time the signal has travelled the distance from multiple transmitters to one static point, both the direction and distance to the target will have shifted. Add to that the fact that the Earth is moving… and the receiver unit is moving too, and you start to wonder how anyone made it work in the first place. Repeating that level of calculation several times over to get the accuracy needed thus becomes very difficult. That’s not all, there’s more…

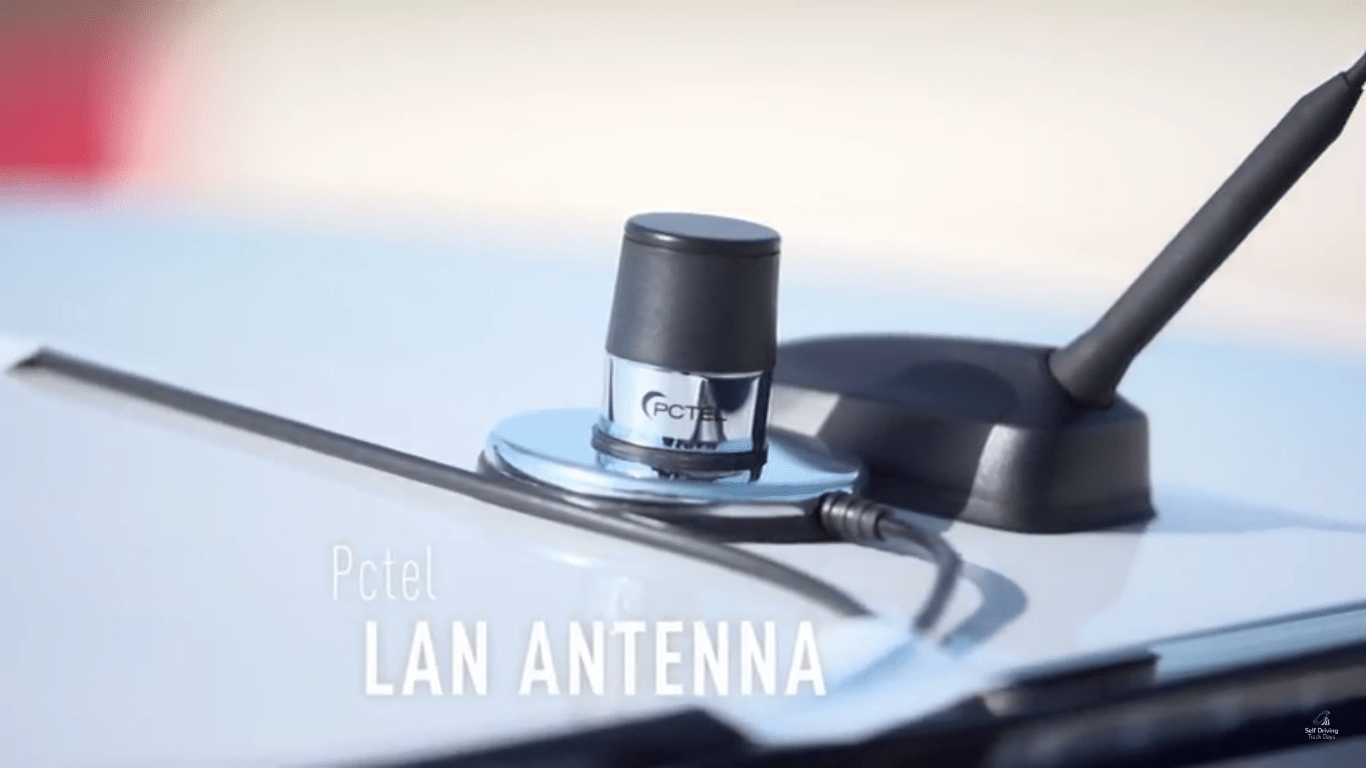

“GPS is a line-of-sight modality, it’s not just vulnerable to bad weather and trees. In cities you get the urban canyon effect, so you don’t get a lock on enough satellites to get a path because the signal can’t go through buildings, which is why we also add inertial measurement – an IMU,” explains Paul.
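To illustrate why inertial measurement helps when the satellites drop out, here is a minimal dead-reckoning sketch. It assumes the vehicle’s speed is available (for example from wheel sensors) and that a yaw rate comes from the IMU’s gyroscope; both are my assumptions for the example, not a description of DataSpeed’s software. Errors in a scheme like this grow over time, which is why it bridges GPS gaps rather than replacing GPS.

```python
import math


def dead_reckon(x, y, heading_rad, speed_mps, yaw_rate_rps, dt):
    """Advance a 2D pose by one time step using speed and yaw rate."""
    x += speed_mps * math.cos(heading_rad) * dt
    y += speed_mps * math.sin(heading_rad) * dt
    heading_rad += yaw_rate_rps * dt
    return x, y, heading_rad


# Start at the last known GPS fix (taken as the origin), heading along x.
pose = (0.0, 0.0, 0.0)

# 100 steps of 10 ms: one second straight ahead at 10 m/s with no GPS input.
for _ in range(100):
    pose = dead_reckon(*pose, speed_mps=10.0, yaw_rate_rps=0.0, dt=0.01)

print(pose)
```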

“For this circuit, if I just wanted to drive around, that’s more than enough. If we can hook up to the cellular network, and use the RTK (real-time kinematic positioning) we can get that down to around 3cm, but you’re probably not going to use your GPS for on-road detail navigation, you’d use your LiDAR and camera systems to see curb details.”

To demonstrate the benefit of the LiDAR (on this vehicle, a Velodyne puck mounted in the middle of the roof), we parked up by the edge of the circuit, near some trees, a tyre barrier and an old gateway.

LiDAR uses focused infrared laser beams, invisible to the naked eye, which travel in a straight line. Because these are photons (albeit not visible to humans), they can be blocked: an obstacle in the way will cast a shadow, hiding from view anything behind it.
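The shadow effect comes down to simple geometry. The sketch below is a simplified 2D model of my own, with the sensor at the origin and the obstacle treated as a circle: a point is hidden if the straight line from the sensor to that point passes through the obstacle. A real LiDAR works in 3D with many beams, so treat this purely as an illustration of the principle.

```python
import math


def is_shadowed(point, obstacle_centre, obstacle_radius):
    """True if a circular obstacle blocks the straight line from a sensor
    at the origin to `point` (simplified 2D model)."""
    px, py = point
    ox, oy = obstacle_centre
    length_sq = px * px + py * py
    if length_sq == 0.0:
        return False  # the point is at the sensor itself
    # Fraction along the beam of the position closest to the obstacle
    # centre, limited to the segment between the sensor and the point.
    t = max(0.0, min(1.0, (ox * px + oy * py) / length_sq))
    closest_x, closest_y = t * px, t * py
    return math.hypot(ox - closest_x, oy - closest_y) < obstacle_radius


# A person-sized obstacle 2 m in front of the sensor.
person = ((2.0, 0.0), 0.3)

print(is_shadowed((5.0, 0.0), *person))  # directly behind the obstacle
print(is_shadowed((5.0, 2.0), *person))  # off to one side
print(is_shadowed((1.0, 0.0), *person))  # between sensor and obstacle
```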

To create a map, whether for following a path in real-time or recording a 3D environment for future use, you need obstacles fairly close-by for the laser to hit. That’s why we’re at the edge of the circuit, rather than in the rather flat, featureless centre. In an urban environment, there would be more buildings, pedestrians and objects in general.

“For the LiDAR to work for SLAM (simultaneous localisation and mapping), we need things for it to see – we can see the wall there, even the foliage on the tree – if we humans can see that and understand it, then a computer can. We can record all this as point cloud data in a file, at the same time we can drive in a small circle in this area – relative to the objects around us. We’re also recording the speed as well.”

This video grab below shows output from the LiDAR displayed on the laptop. The arrow heading in front of the car is the shadow cast by me, acting as an obstacle to the infrared laser beam – behind that shadow, everything is invisible. This is the main reason why multiple sensor systems are used. The GPS readout is visible on the left of the screen.

Paul replayed the recording, and I watched as he withdrew his hands from the wheel and feet from the pedals and we moved away, the laptop in front of me clearly showing the point cloud that we’d recorded just moments previously, with tree branches, barriers and cones all mapped out in 3D.

The mapped path was displayed on the laptop as a thin purple line drawn out ahead of a small car icon, with a few blue blobs immediately in front of the icon to indicate the exact position that was being calculated at that moment.

“There’s a lot of maths to do this properly,” Paul highlighted, as the laptop continued to warm my knees. “But take the GPS, LiDAR, put a camera on, tie in your GPS to a map database, you’re ready to start developing your fully autonomous vehicle.”

OK, so we’re all set? Not so fast. Actually, you need more than just a development platform.

“You need engineers – mechatronics, by-wire systems, design and build of sensor systems, mechanical engineers for integration. Probably the most difficult is the algorithms – the type of software that’s being developed is not web development… it’s highly maths based, and implementing high level maths as algorithms in a programming language is hard. In a development environment, we’re using ROS and certainly Python, C and C++, but for production you’re always going to be looking at safety rated operating systems, something like VxWorks, an i7 platform with an NVIDIA processor for vision, safety rated and validated – the software is the most difficult part for both R&D and the transition to production.”

Next up in our wide-ranging conversation was testing. Much has been made of building physical environments for testing vehicles: every country has test tracks, and many manufacturers and even suppliers do too.

“Doing testing like this is expensive. You can only run so many scenarios. Putting together simulation environments and doing 100,000 variations and use-cases is very important, you’re not going to be able to do that manually.”
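To give a feel for how quickly scenario counts grow, here is a small sketch that enumerates every combination of five scenario parameters with ten values each. The parameters and their ranges are hypothetical, chosen only so that the total lands on the 100,000 figure Paul mentions; a real simulation campaign would pick its own variables and sampling strategy.

```python
import itertools

# Hypothetical scenario parameters, ten values each, invented for illustration.
speeds_kph = range(10, 110, 10)        # 10, 20, ... 100
rain_mm_per_hour = range(0, 10)        # 0 to 9
pedestrian_count = range(0, 10)        # 0 to 9
lead_vehicle_gap_m = range(5, 55, 5)   # 5, 10, ... 50
sun_elevation_deg = range(0, 90, 9)    # 0, 9, ... 81


def scenario_grid():
    """Yield every combination of the parameters above as a tuple."""
    return itertools.product(
        speeds_kph,
        rain_mm_per_hour,
        pedestrian_count,
        lead_vehicle_gap_m,
        sun_elevation_deg,
    )


scenario_count = sum(1 for _ in scenario_grid())
print(scenario_count)
```

Adding a sixth ten-value parameter would take the total to a million, which is the practical argument for doing this in simulation rather than on a track.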

“Engineers make assumptions, we don’t design anything to fail, but look at Apollo 13 or the Shuttle programme, it’s the edge cases that crop up and cause problems. We’ll minimise bad things happening with autonomous vehicles, I don’t think you’ll ever eliminate them, but overall driving will be safer.”

The average age of cars on the road is about 10 years, so even if all new vehicles were made autonomous ‘tomorrow’, it would be ten years before we reached 50% penetration. But that won’t happen for at least a decade, so already we’re at 20 years, and that’s only half. Allow at least another decade for the other half, and we reach an almost entirely autonomous fleet by 2047.

I asked Paul if he could envisage a future in which we’ll only be allowed to drive on a track, as an exotic pastime like flying is today.
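That back-of-envelope timeline can be written down as a very simple linear turnover model. The assumptions are mine and deliberately crude: vehicle ages are spread evenly, so an average age of 10 years implies the whole fleet is replaced over 20 years, and every new car sold after the start delay is autonomous.

```python
def autonomous_share(years_from_now, start_delay_years=10, average_age_years=10):
    """Fraction of the fleet that is autonomous under a linear turnover model.

    Assumes an even spread of vehicle ages, so the full fleet turns over in
    twice the average age, and that all new cars sold after the delay are
    autonomous.
    """
    fleet_lifetime_years = 2 * average_age_years
    years_selling = max(0, years_from_now - start_delay_years)
    return min(1.0, years_selling / fleet_lifetime_years)


for years in (10, 20, 30):
    print(years, autonomous_share(years))
```

Under these assumptions the model gives nothing after 10 years, half the fleet after 20 and the whole fleet after 30, matching the reasoning above.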

“I drive an Aston Martin, I don’t want that to be autonomous, I want the experience of driving a vehicle like that. I don’t want the computer to control it, I want to be the driver!”

Nobody really knows. We can all gaze wistfully into the future, but any type of improvement to safety on roads must be taken seriously.

“Within the next 10-20 years we’re going to see a large amount of transformation in the transport and mobility industry. Look what Uber and Lyft have done to the use of the Taxi and the Taxi driver, with ride-sharing.”

“One of the things in the automotive industry, what the OEMs have to pay attention to, is that utilisation of the vehicle is around 5%, something with a value of tens of thousands is just sitting in the driveway. Uber drivers aren’t just using their own cars for themselves or their rides, they’re opening the opportunity for fewer cars in the future.”

The final demo of the afternoon was a high-speed run: we recorded a lap at a fairly brisk pace – certainly not race speed or particularly close to what anyone would call a racing line, but if you happen to have access to a closed circuit, it would be rude not to put your foot down just a bit.

Paul made a point of saying that the car’s replay of the lap would highlight problems that many driverless vehicles have, and that I highlight above as well: compute power. This was, he reminded me, a ‘blank canvas’ R&D platform, not a supercomputer-equipped production model.

Thinking back to the path prediction line plotted by the laptop, each time we drove fast enough to catch up with the planned path (as calculated against the GPS plot) the car lost accuracy. But remember, we’re in a far from ideal environment and only using one of the systems – many more would be essential for a robust road or race experience.

Deliberately pushing limits of a vehicle’s technical capabilities is bound to end in problems, but that’s the nature of R&D – and it’s very different to what anyone should expect to see in production vehicles.

Paul will be bringing his vehicle to the Self Driving Track Days events in April (UK) and July (Austria).

If you’ve found this article interesting, you can find out more and take part at one of our upcoming meetups, or attend one of our one-day introductory workshops.

Paul will also be on-site during AutoSens US, which is taking place in May at the M1 Concourse in Detroit, Michigan.

AutoSens is our flagship project, and the world’s leading vehicle perception conference and exhibition. Aimed at engineers already working in industry, it sits alongside the Self Driving Track Days project and provides us with great access to industry experts around the world.