IDTechEx attended AutoSens 2019 in Brussels. The event took place at the car museum in Brussels. It focuses on all future vehicle perception technologies, including LiDAR, radar, and camera, and covers the hardware side as well as the software and data-processing side. This is an excellent event with high-quality speakers drawn from established firms as well as start-ups working to advance automotive perception technologies. In general, it is a technology-focused event, designed to be an event by engineers for engineers. The next edition of AutoSens will be in Detroit on 12-14 May 2020. In this article, we summarize key learnings around LiDAR technologies.

We attended this event as part of our ongoing research into autonomous mobility and all associated perception technologies, including LiDARs and radars. Our research offers scenario-based twenty-year forecasts projecting the rise of various levels of autonomous vehicles. Our forecasts not only focus on unit sales but also offer a detailed price-evolution projection segmented by all the key constituent technologies in an autonomous vehicle. Furthermore, our research considers the impact of shared mobility on total global vehicle sales, forecasting a peak-car scenario. For more information, please see the IDTechEx report “Lidar 2020-2030: Technologies, Players, Markets & Forecasts”. This report also covers all enabling technologies, including LiDAR, radar, camera, HD mapping, security systems, 5G, and software.

Furthermore, we have in-depth and comprehensive research on LiDAR and radar technologies. Our report “Lidar 2020-2030: Technologies, Players, Markets & Forecasts” offers a comprehensive analysis of 106 players developing 3D LiDAR for the ADAS and autonomous vehicle markets. It provides detailed quantitative technology benchmarking and histograms, highlighting key performance trade-offs and constraints as well as key technology trends. The trends considered cover the measurement principle (ToF vs FMCW), wavelength of choice (1550nm vs 905nm or other), beam steering (mechanical rotation, MEMS, non-mechanical rotation, liquid crystal waveguides, LCoS, LC metasurfaces, Si or Si3N4 photonics, and so on), photodetector, laser source, and more. The report offers interview-based detailed company profiles, realistic technology roadmaps and analysis, and market projections segmented by application, showing the rise and fall of various LiDAR technologies.

ESPROS Photonics: using a high-performance CCD to improve the distance vs. noise trade-off

ESPROS Photonics presented its time-of-flight (ToF) LiDAR measurement mechanism (905nm laser). ESPROS is a full-fledged IC design and production company headquartered in Switzerland, with an additional design centre in China.

The challenge that ESPROS seeks to address is the trade-off between measurement time, which relates to distance, and signal-to-noise ratio (SNR). To await the arrival of the signal from afar, the image sensor must be kept open for a long time. In turn, this translates into a large accumulated ambient signal, which causes shot noise. The accumulated ambient signal grows linearly with integration time, so its shot noise grows with the square root of the integration time, whereas the strength of the returning signal falls with the square of the distance. As such, ambient shot noise can easily mask the weak return signals when measuring long distances.
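
The trade-off can be made concrete with a toy photon budget. The sketch below assumes a target whose echo falls as 1/R², an ambient photo-electron rate that accumulates for the whole round-trip time 2R/c, and Poisson shot noise. The values of `signal_at_1m` and `ambient_rate` are hypothetical placeholders, not ESPROS figures.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def round_trip_time_s(distance_m: float) -> float:
    """Time the sensor must stay open to receive an echo from distance_m."""
    return 2.0 * distance_m / C


def snr_open_exposure(distance_m: float,
                      signal_at_1m: float = 1e6,  # hypothetical echo electrons at 1 m
                      ambient_rate: float = 1e9,  # hypothetical ambient electrons per second
                      ) -> float:
    """Toy SNR for a pixel left open for the full round trip (shot-noise limited)."""
    signal = signal_at_1m / distance_m ** 2                 # echo falls as 1/R^2
    ambient = ambient_rate * round_trip_time_s(distance_m)  # ambient grows linearly with exposure
    shot_noise = math.sqrt(signal + ambient)                # Poisson noise ~ sqrt(total electrons)
    return signal / shot_noise


if __name__ == "__main__":
    for d in (10, 50, 100, 200):
        print(f"{d:>4} m  SNR = {snr_open_exposure(d):.2f}")
```

Doubling the range quarters the echo while the ambient shot noise grows by about √2, so the SNR collapses quickly at long range.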

To overcome this challenge, one could always boost the laser output, but that is difficult. The proposed solution is illustrated below. It uses a CCD sensor to transfer the photo-generated charges pixel-by-pixel through the CCD array. Here, once the laser is fired, the appropriate measurement column is selected for 5ns. The light is captured and converted into charges, which are then carried sequentially through the CCD array. The process is then repeated. This way, the total exposure time is 5ns, reducing the captured ambient light by a factor of 360. To achieve this, ESPROS is proposing a monolithic CCD/CMOS technology with a QE of >70% at 905nm and a pixel rise time of <7ns.
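
The benefit of the 5ns gate can be illustrated with a simple shot-noise model. In the sketch below, each CCD stage integrates light for only one 5ns slot, so the stage that receives the echo sees 1/360 of the ambient light collected by a pixel left open for the whole window. Reading the factor of 360 as 360 consecutive 5ns slots (a 1.8µs window, roughly 270m of range) is our assumption, and the electron counts are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light, m/s
SLOT_S = 5e-9      # 5 ns gate per CCD stage, as presented
N_SLOTS = 360      # assumed number of consecutive slots in one measurement window


def window_range_m(n_slots: int = N_SLOTS, slot_s: float = SLOT_S) -> float:
    """Maximum target distance covered by n_slots consecutive gates."""
    return C * n_slots * slot_s / 2.0


def gated_vs_open(signal_e: float, ambient_rate: float,
                  n_slots: int = N_SLOTS, slot_s: float = SLOT_S):
    """Compare one long exposure with the single 5 ns slot that contains the echo.

    Returns (ambient reduction factor, SNR open, SNR gated), assuming the echo
    pulse is shorter than one slot and detection is shot-noise limited.
    """
    open_ambient = ambient_rate * n_slots * slot_s  # ambient over the whole window
    gated_ambient = ambient_rate * slot_s           # ambient over one slot only
    snr_open = signal_e / math.sqrt(signal_e + open_ambient)
    snr_gated = signal_e / math.sqrt(signal_e + gated_ambient)
    return open_ambient / gated_ambient, snr_open, snr_gated


if __name__ == "__main__":
    ratio, snr_open, snr_gated = gated_vs_open(signal_e=50, ambient_rate=2e9)
    print(f"window ~{window_range_m():.0f} m, ambient cut x{ratio:.0f}")
    print(f"SNR open {snr_open:.2f} -> gated {snr_gated:.2f}")
```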

SiLC: a fabless photonics firm designing an FMCW 1550nm LiDAR vision sensor

SiLC is a fabless company established in 2018 in California to develop single-chip frequency-modulated-continuous-wave (FMCW) LiDAR operating at 1550nm.

The 1550nm choice is important. It is beneficial because the maximum allowed operating power at this wavelength is 40x higher than at 905nm. The solar irradiance is 3x lower than at 905nm, and it performs substantially better in rain, snow, and on wet surfaces. The consequence of selecting 1550nm, however, is that more complicated non-silicon laser and detection technologies are required.

SiLC is designing its chips based on FMCW, a principle similar to that used in today’s automotive radars. In this arrangement, an up-and-down chirp (frequency ramp) is generated. The round-trip delay of the returning signal (set by range) and its Doppler shift (set by radial velocity) manifest as a frequency offset, which is measured by mixing the returning signal with the local signal to obtain the differential beat frequency (a much lower frequency than the chirp signal).
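
The triangular-chirp arithmetic can be sketched as follows. With an up-chirp and a down-chirp, the range term adds to both beat frequencies while the Doppler term shifts them in opposite directions, so averaging and differencing the two beats separates range from velocity. The chirp bandwidth, chirp duration, and sign convention below are illustrative assumptions, not SiLC's parameters.

```python
C = 299_792_458.0  # speed of light, m/s


def beat_frequencies(range_m, velocity_mps, bandwidth_hz, chirp_s, wavelength_m=1550e-9):
    """Forward model: beat frequencies on the up- and down-chirp.

    Assumed convention: positive velocity = approaching target, which lowers
    the up-chirp beat and raises the down-chirp beat.
    """
    slope = bandwidth_hz / chirp_s                 # chirp slope, Hz/s
    f_range = 2.0 * range_m * slope / C            # beat caused by the round-trip delay
    f_doppler = 2.0 * velocity_mps / wavelength_m  # Doppler shift
    return f_range - f_doppler, f_range + f_doppler


def range_and_velocity(f_up_hz, f_down_hz, bandwidth_hz, chirp_s, wavelength_m=1550e-9):
    """Inverse: recover range and radial velocity from the two beat frequencies."""
    slope = bandwidth_hz / chirp_s
    f_range = (f_up_hz + f_down_hz) / 2.0
    f_doppler = (f_down_hz - f_up_hz) / 2.0
    return C * f_range / (2.0 * slope), wavelength_m * f_doppler / 2.0


if __name__ == "__main__":
    # Illustrative chirp: 1 GHz swept in 10 us; target at 100 m closing at 20 m/s
    f_up, f_down = beat_frequencies(100.0, 20.0, 1e9, 10e-6)
    print(f"beats: {f_up / 1e6:.1f} MHz up, {f_down / 1e6:.1f} MHz down")
    print(range_and_velocity(f_up, f_down, 1e9, 10e-6))
```

Both beats land in the tens of MHz, far below the roughly 193THz optical carrier at 1550nm, which is why they can be digitized with ordinary electronics.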

FMCW has many clear advantages over the time-of-flight (ToF) systems that are most common today. FMCW provides instantaneous velocity data, whereas ToF derives velocity algorithmically by comparing two or more measurements. An FMCW LiDAR only detects returning photons that are coherent with its own local signal, making it intrinsically less prone to interference from other LiDAR sources. LiDAR interference can become a challenge as more vehicles are equipped with LiDARs. In ToF, some amplitude modulation will be required for signal coding. This, however, comes at the expense of SNR, since SNR is proportional to peak power. Moreover, FMCW at 1550nm can operate at much lower power levels than ToF at 905nm or shorter (0.4W vs. 100W). The SNR can be 10x higher, and the range resolution and precision can be controlled with the chirp bandwidth.

However, FMCW comes with significant design and manufacturing complexity. First, a tuneable, narrow-linewidth laser source is required. At 1550nm, the choice is likely to be InP. The laser will need to be placed in a cavity, and its frequency sweep linearized with on-board drive circuitry. A non-silicon photodetector will also be required; in the case of SiLC, this is likely a germanium-based detector. We guess that SiLC's LiDAR chips are produced on 8-inch silicon wafers by a Japanese manufacturer.
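
Why the laser must be spectrally narrow can be shown with a rule-of-thumb calculation: coherent detection works best when the laser's coherence length exceeds the round-trip path. For a Lorentzian lineshape, the coherence length is commonly approximated as L_c = c/(πΔν). The sketch below applies that approximation; the 200m target range is an illustrative assumption.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def coherence_length_m(linewidth_hz: float) -> float:
    """Coherence length for a Lorentzian lineshape, L_c = c / (pi * linewidth)."""
    return C / (math.pi * linewidth_hz)


def max_linewidth_hz(max_range_m: float) -> float:
    """Largest linewidth whose coherence length still spans the 2R round trip."""
    return C / (math.pi * 2.0 * max_range_m)


if __name__ == "__main__":
    print(f"200 m range -> linewidth below ~{max_linewidth_hz(200) / 1e3:.0f} kHz")
```

A sub-MHz linewidth requirement like this is demanding, which illustrates why FMCW laser sources add design complexity.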

As far as I understood, the beam-scanning module can be located away from the sensor chip, allowing a flexible distributed architecture in which the expensive vision sensor chips are placed in safe spots within the car whilst the beam-steering units are distributed. Note that the beam-distributing unit can be an optical phased array (OPA), which SiLC has demonstrated with a silicon OPA, as well as other options such as MEMS.

The challenge with an active OPA is, of course, the complexity of the design, especially if active phase shifting in two dimensions is required. To achieve a reasonable aperture size (2-3cm), many phase shifters over waveguides (3um or so) will be required.
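
A back-of-envelope count shows why. The sketch below assumes that the ~3um figure is the waveguide pitch and that every waveguide (or, in 2D, every array element) needs its own phase shifter; both are our simplifying assumptions. Dimensions are given in integer micrometres to keep the arithmetic exact.

```python
def phase_shifters_needed(aperture_um: int, pitch_um: int, two_dimensional: bool = False) -> int:
    """Number of independently driven phase shifters for a uniform OPA.

    One shifter per waveguide along each axis (ceiling division); a fully
    2D-steered array needs one per element, i.e. the per-axis count squared.
    """
    per_axis = -(-aperture_um // pitch_um)  # ceiling division on integers
    return per_axis ** 2 if two_dimensional else per_axis


if __name__ == "__main__":
    for aperture_um in (20_000, 30_000):  # 2 cm and 3 cm apertures
        print(aperture_um // 1000, "mm:",
              phase_shifters_needed(aperture_um, 3), "per axis,",
              phase_shifters_needed(aperture_um, 3, two_dimensional=True), "for full 2D")
```

Thousands of shifters per axis, and tens of millions for a fully 2D array, is what makes active 2D OPAs so demanding under these assumptions.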

Interestingly, the ability of FMCW to control range resolution and precision through the chirp bandwidth suggests that high-accuracy measurements can be made. This is shown below, arguing that optical FMCW sensors can offer very accurate measurements, beating other techniques. This can open up non-automotive applications. This is important because it might open up revenue streams that do not require advanced beam steering and automotive-grade electronics. Such revenues also need not wait for higher levels of autonomy (>3) to arrive in large numbers.
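
The link between chirp bandwidth and range resolution is the standard FMCW relation ΔR = c/(2B). The sketch below evaluates it for a few illustrative bandwidths (our choice, not figures from the talk); measurement precision can be finer than this two-target resolution when the SNR is high.

```python
C = 299_792_458.0  # speed of light, m/s


def range_resolution_m(bandwidth_hz: float) -> float:
    """Two-target range resolution of an FMCW chirp: c / (2 * B)."""
    return C / (2.0 * bandwidth_hz)


if __name__ == "__main__":
    for b_ghz in (1, 10, 100):  # illustrative chirp bandwidths
        print(f"B = {b_ghz:>3} GHz -> resolution {range_resolution_m(b_ghz * 1e9) * 1e3:.1f} mm")
```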

Xenomatix: solid-state flash LiDAR combined with a parallax-free 2D monochrome camera

Xenomatix was established in Belgium in 2013. Its technology is based on NIR wavelengths (<950nm). In this multi-beam LiDAR configuration, thousands of laser beams are projected simultaneously at high frame rates, and their reflections are monitored by a cost-effective CMOS photodiode array. The CMOS photodiodes used in XenoLidar are similar to those used in RGB cameras in automotive vision systems; photodetectors of this type are an established technology with a long product lifetime. The processing algorithms can reject returning signals that arrive out-of-angle, thus boosting SNR and range.
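
Rejecting out-of-angle returns is possible because each projected beam is expected to land on a known spot of the photodiode array. A minimal sketch, assuming a hypothetical calibration table of expected spot positions (Xenomatix's actual algorithms were not disclosed), keeps only detections that fall within a small pixel tolerance of an expected spot:

```python
from typing import Iterable, List, Set, Tuple

Detection = Tuple[int, int, float]  # (row, col, intensity)


def filter_out_of_angle(detections: Iterable[Detection],
                        expected_spots: Set[Tuple[int, int]],
                        tolerance_px: int = 1) -> List[Detection]:
    """Drop returns that do not arrive where one of our own beams should land.

    expected_spots is a hypothetical calibration of where each projected beam
    appears on the array; anything elsewhere is treated as ambient light or
    interference from a foreign source.
    """
    kept = []
    for row, col, intensity in detections:
        if any(abs(row - r) <= tolerance_px and abs(col - c) <= tolerance_px
               for r, c in expected_spots):
            kept.append((row, col, intensity))
    return kept


if __name__ == "__main__":
    spots = {(10, 10), (10, 20)}
    hits = [(10, 11, 5.0), (15, 15, 9.0), (10, 20, 3.0)]
    print(filter_out_of_angle(hits, spots))
```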

It first launched XenoTrack (2015), which used laser triangulation to offer mm-level-accurate short-range (15m) measurements for applications such as mapping road topography. Later, it launched XenoLidar (2018) in two versions: one for urban driving (50m range with a 60° FOV) and one for highways (170-200m range with a narrower 30° FOV).
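
Laser triangulation recovers depth from the image-plane offset (disparity) of a laser spot viewed across a known baseline. Under the standard pinhole model, depth is z = f·b/d, and a fixed disparity error produces a depth error that grows with z², which is why triangulation excels at short range. The focal length, baseline, and disparity error below are hypothetical values, not XenoTrack specifications.

```python
def triangulation_depth_m(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Pinhole-model depth from disparity: z = f * b / d."""
    return focal_px * baseline_m / disparity_px


def depth_error_m(depth_m: float, focal_px: float, baseline_m: float,
                  disparity_err_px: float = 0.1) -> float:
    """First-order depth error for a given disparity error: dz = z^2 * dd / (f * b)."""
    return depth_m ** 2 * disparity_err_px / (focal_px * baseline_m)


if __name__ == "__main__":
    f_px, b_m = 2000.0, 0.2  # hypothetical focal length (pixels) and baseline (metres)
    for z in (1.0, 2.0, 4.0):
        print(f"z = {z:.0f} m -> depth error ~{depth_error_m(z, f_px, b_m) * 1e3:.2f} mm")
```

Each doubling of distance quadruples the depth error for the same disparity error.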

Xenomatix is now evolving its offerings with advanced signal processing. The idea is to move the product beyond object detection to object recognition. The fact that the same CMOS photodetectors output both a monochrome 2D image and the LiDAR measurements enables parallax-free alignment.

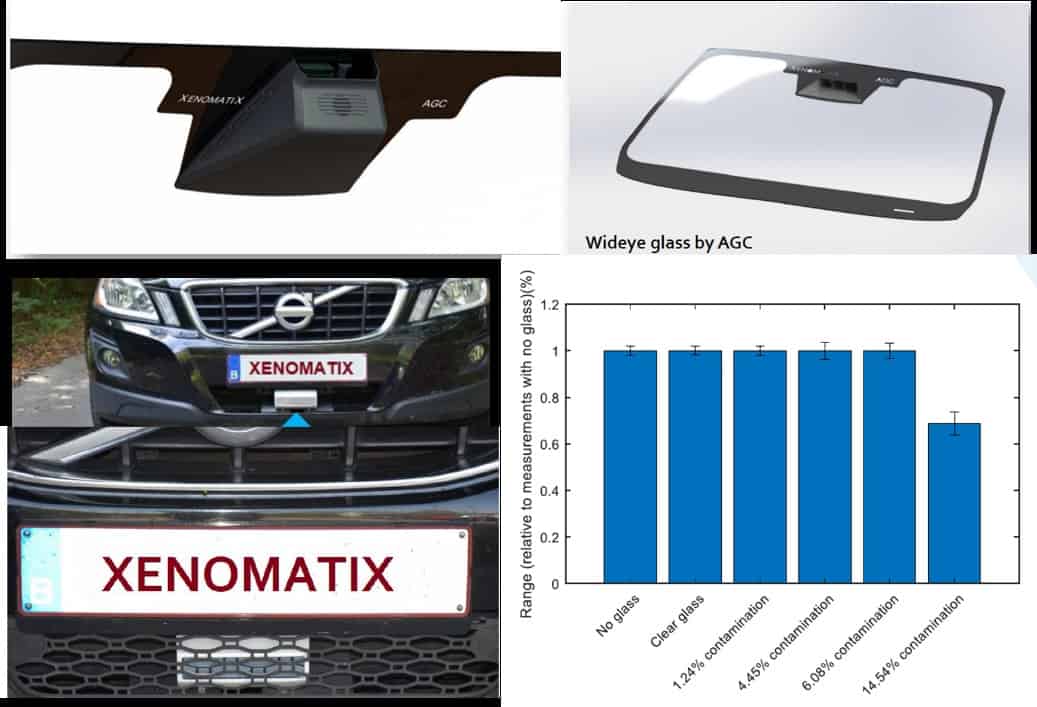

Xenomatix has made progress in integrating its products into vehicles. It has developed behind-window and behind-bumper integrations, as shown in the figure. Importantly, Xenomatix is testing to show that the LiDAR can operate behind a grid or window and in the presence of heating wires, some mud, and other contaminants. The LiDAR should also detect when its view is too contaminated, so as to trigger automatic washing.

Various LiDAR-in-vehicle integration approaches developed by Xenomatix.

To learn more about all LiDAR technologies, see “Lidar 2020-2030: Technologies, Players, Markets & Forecasts”. This report analyses all technology choices and their consequences, including ToF vs FMCW measurement, SWIR vs NIR wavelength, and mechanical vs MEMS vs photonics OPA vs LCD OPA and other beam steering. It considers all laser sources, photodetectors, and so on. It provides detailed profiles, product and technology benchmarking, and market forecasts segmented by technology.

Blickfeld: a large MEMS mirror to achieve long-range, wide-FOV LiDARs

Blickfeld is a start-up founded in 2017 in Munich, Germany, developing a MEMS-based LiDAR. Its target is to provide reliable, cost-effective, and scalable products. As such, it selects many off-the-shelf components.

Blickfeld designed the LiDAR itself, including the MEMS mirror. It is a large piezoelectrically-driven mirror (108mm) compared to conventional MEMS mirrors (e.g., 54mm²). A custom drive mechanism compensates for the non-linearities of the large mirror's motion, which can be more difficult to control. This allows a wider FoV and better SNR. Two LiDARs are now available. One has a wider FoV (100°×30°) but a poorer angular resolution (0.4°). The other has a narrower FoV (15°×10°) but a better angular resolution (0.18°).
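
The FoV-resolution trade-off between the two products can be quantified by counting the angular samples per frame. The sketch assumes uniform sampling at the quoted angular resolution on both axes, which is a simplification of a real scan pattern.

```python
def points_per_frame(h_fov_deg: float, v_fov_deg: float, res_deg: float) -> int:
    """Approximate samples per frame on a uniform grid at res_deg spacing."""
    return round(h_fov_deg / res_deg) * round(v_fov_deg / res_deg)


if __name__ == "__main__":
    wide = points_per_frame(100, 30, 0.4)    # wide-FoV variant
    narrow = points_per_frame(15, 10, 0.18)  # narrow-FoV, finer-resolution variant
    print(f"wide: ~{wide} points, narrow: ~{narrow} points")
```

Under this assumption, the wide unit spreads roughly four times as many samples over a much larger solid angle, while the narrow unit concentrates fewer samples into a small window with finer spacing.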

The company has also selected a laser diode operating at 905nm. Laser diodes are easier to operate today and more price-competitive than fiber lasers, although the latter can offer higher performance. The control circuit compensates for the temperature dependence of laser diodes. The choice of a laser diode fits the company's design philosophy that the product should be scalable and cost-effective. Its target price is $200-300 per unit.
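
One common way to model the temperature dependence of a laser diode is through its threshold current, which rises roughly exponentially with temperature: I_th(T) = I_th,ref·exp((T - T_ref)/T0). The sketch below uses that textbook model to compute the drive current needed to hold optical power constant. Blickfeld's actual control circuit was not described, all parameter values are hypothetical, and the model ignores the drop in slope efficiency with temperature.

```python
import math


def threshold_current_a(temp_c: float, i_th_ref_a: float = 1.0,
                        t_ref_c: float = 25.0, t0_k: float = 100.0) -> float:
    """Threshold current via the characteristic-temperature (T0) model."""
    return i_th_ref_a * math.exp((temp_c - t_ref_c) / t0_k)


def drive_current_a(target_power_w: float, temp_c: float,
                    slope_eff_w_per_a: float = 1.0) -> float:
    """Current that keeps output at target_power_w above the shifted threshold.

    Simplification: slope efficiency is held constant over temperature.
    """
    return threshold_current_a(temp_c) + target_power_w / slope_eff_w_per_a


if __name__ == "__main__":
    for t in (-20, 25, 85):
        print(f"{t:>4} C -> drive {drive_current_a(10.0, t):.2f} A")
```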

Blickfeld is setting up an automated production line (200k units/year capacity). It is working with Koito (Japan) to integrate its LiDARs into headlamps. Blickfeld also has a software team so that LiDAR data can be pre-processed within the LiDAR unit before being passed on to the rest of the system.

Outsight: combining SLAM with multispectral and SWIR sensing to deliver perception, localization, and semantics

Outsight is a newcomer to the automotive perception business. In fact, it announced its entry at AutoSens 2019. Only the outline of the proposition is clear; the details are rather scant.

Outsight proposes to offer perception, localization, and semantics. Semantics here means that the sensor can provide information about material composition (e.g., detecting ice), which could vastly improve decision-making: for example, the braking distance on ice is long and must therefore be taken into account by the algorithms.
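
The ice example is easy to quantify. On a flat road, the ideal braking distance from speed v is d = v²/(2μg), so it scales inversely with the tyre-road friction coefficient μ. The μ values below (0.7 for dry asphalt, 0.1 for ice) are typical illustrative figures, not data from Outsight, and the model ignores reaction time.

```python
G = 9.81  # gravitational acceleration, m/s^2


def braking_distance_m(speed_kmh: float, mu: float) -> float:
    """Ideal stopping distance on a flat road: v^2 / (2 * mu * g), reaction time ignored."""
    v = speed_kmh / 3.6  # km/h -> m/s
    return v ** 2 / (2.0 * mu * G)


if __name__ == "__main__":
    for surface, mu in (("dry asphalt", 0.7), ("ice", 0.1)):  # illustrative friction values
        print(f"{surface:>11}: {braking_distance_m(50, mu):.1f} m from 50 km/h")
```

With these values, the car needs about seven times the distance to stop on ice, which is why knowing the surface material in advance matters to the planner.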

The technology appears to be based on sensing across a range of wavelengths, i.e., hyperspectral sensing. We think the sensor is composed of an RGB camera as well as an InGaAs camera that senses SWIR signals. The sensor likely has different laser sources onboard, emitting light in the visible and SWIR ranges. For the latter, we speculate that InP is used, but we are not certain.

No technical data or product specifications were disclosed. As such, one does not know the exact wavelengths of operation, the field of view, the beam-steering technology (if any), and so on. The proposition is attractive; however, more details are required to make an assessment.

Note that the company was born out of Dibotics, a 3D SLAM company that had worked on the software aspects of SLAM technology. All of Dibotics' software assets are incorporated into Outsight.

To learn more about technology and market developments in LiDAR technology please visit www.IDTechEx.com/Lidar.

Written by Dr Khasha Ghaffarzadeh