Algolux sees the broad deployment of safe and effective autonomous transportation, automated driving, and next-generation advanced driver-assistance systems (ADAS) as a game-changing goal that promises society-level impact.

Benefits include improved road safety to reduce fatalities and injuries, more efficient transport of people and freight, expanded accessibility for people with disabilities, reduced traffic congestion and pollution, and new business opportunities.

This depends on cutting-edge technologies from a heavily invested ecosystem of OEMs, Tier 1 suppliers, and Tier 2 hardware and software providers. But advancements are still required to overcome current limitations that hinder safe and efficient driving, and the most critical enabler of ADAS and autonomous vehicles (AVs) is robust, dense depth and perception in all conditions [Figure 1].

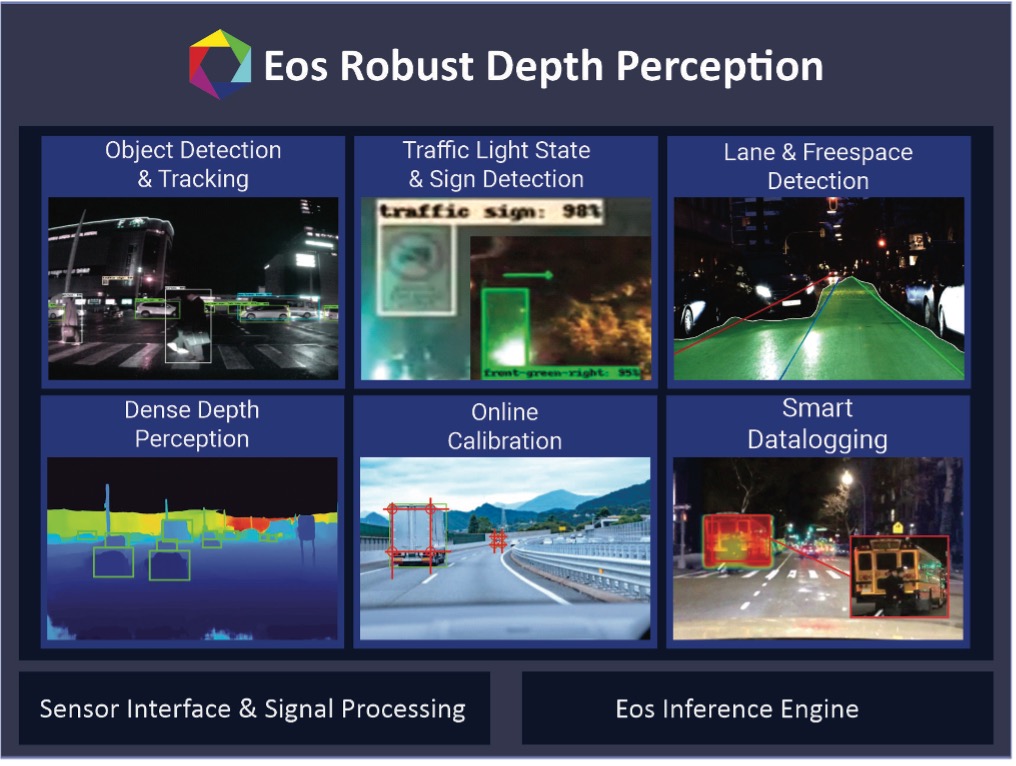

Algolux is excited to share the breakthroughs we’ve made in this space, including our recently announced expanded Eos Robust Depth Perception Software, which addresses the fundamental challenges described below.

“Mercedes-Benz has a track record as an industry leader delivering ADAS and autonomous driving systems, so we intimately understand the need to further improve the perception capabilities of these systems to enable safer operation of vehicles in all operating conditions,” said Werner Ritter, Manager, Vision Enhancement Technology Environment Perception, Mercedes-Benz AG. “Algolux has leveraged the novel AI technology in their camera-based Eos Robust Depth Perception Software to deliver dense depth and detection that provide next-generation range and robustness while addressing limitations of current lidar and radar based approaches for ADAS and autonomous driving.”

The Difficult Reality of Road Safety

Improved ADAS and AV deployment will reduce road traffic injuries. Road accidents remain a leading cause of death and injury worldwide, with over 1.3M deaths and an estimated 20-50M injuries annually, and they are by far the leading cause of preventable injury ([1] WHO, 2022, [2] NSC, 2022). They are the top cause of death for young people aged 5-29 years, and more than half of all road traffic deaths are among vulnerable road users: pedestrians, cyclists, and motorcyclists. These sobering figures remain high, and the economic impact of road accidents is estimated at 1-2% of a country’s GDP annually.

In the US alone, traffic deaths have reached a 16-year high and pedestrian fatalities have reached their highest level in 40 years (GHSA, 2022), with nighttime pedestrian deaths rising by 41% since 2014. For trucking specifically, there are nearly 550,000 accidents annually in the US, approximately 130,000 of which cause injuries and 100,000 of which are caused by sleep deprivation, and fatalities involving trucks have increased by 54% since 2009.

Growing Truck Driver Shortages

One clear opportunity is commercial transportation. Much has been written about the worldwide truck driver shortage. A new International Road Transport Union study (IRU, 2022) estimates 2.6M unfilled driver positions across the 25 countries surveyed, with shortages of 80,000 in the US, nearly 400,000 across Europe, and 1.8M in China. The shortage is forecast to increase significantly in 2022 and to keep growing due to a lack of skilled workers, an aging driver population, and waning interest in the profession because of quality-of-life issues. Coach and bus driver populations show similar trends.

Robust Perception and Depth Are Fundamental for ADAS and AVs

The perception stack is the most crucial element of an AV or ADAS system for improving vehicle safety. It ingests sensor data and interprets the vehicle’s surroundings through an ensemble of sophisticated algorithms [Figure 2]. It then delivers the information the vehicle needs to warn a driver or to make good control decisions itself.

For an L2 – L3 (SAE, 2021) driving system to help a driver, or for an L4 autonomous vehicle to drive itself safely, the perception system must provide robust detection and accurate distance estimation of objects in all lighting and weather conditions (e.g. rain, fog, or snow) and on city or highway routes. These requirements can be constrained to the operational design domain (ODD) in which the system is designed to operate, such as only during highway travel or only under well-lit daytime conditions. Interestingly, the automotive industry calls low light and poor weather “corner cases” even though they are common occurrences. The perception system must also have sufficient range to give the system or driver time to respond to an issue. At highway speeds, this range needs to extend to 300m for passenger vehicles and over 500m for Class 8 trucks in order to safely brake or maneuver to avoid an accident.
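As a rough illustration of why range requirements grow so quickly with speed, the sketch below computes a basic stopping distance: the distance travelled during a reaction delay plus the braking distance at constant deceleration, d = v·t_r + v²/(2a). The speeds, reaction time, and deceleration values are assumptions chosen only for illustration. The sketch does not attempt to reproduce the 300m and 500m targets above, and it ignores detection confirmation, planning latency, and road grade.

```python
def kmh_to_ms(speed_kmh: float) -> float:
    """Convert km/h to m/s."""
    return speed_kmh / 3.6


def stopping_distance_m(speed_kmh: float, reaction_s: float, decel_ms2: float) -> float:
    """Distance covered during the reaction time plus braking to a stop.

    d = v * t_r + v^2 / (2 * a), assuming constant deceleration a.
    """
    v = kmh_to_ms(speed_kmh)
    return v * reaction_s + v * v / (2.0 * decel_ms2)


# Illustrative assumptions only: a car at 130 km/h braking at 6 m/s^2 and a
# loaded truck at 100 km/h braking at 3 m/s^2, each with 1.5 s of
# perception + reaction latency.
car = stopping_distance_m(130, reaction_s=1.5, decel_ms2=6.0)
truck = stopping_distance_m(100, reaction_s=1.5, decel_ms2=3.0)
print(f"car: {car:.0f} m, truck: {truck:.0f} m")
```

Because the braking term scales with v² and with 1/a, halving the available deceleration (for example on a wet road) doubles the braking distance, which is one reason heavier vehicles and poor conditions call for longer detection range.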

ADAS sensing today primarily relies on cameras and radar to enable detection and distance estimation for driving functions. Sensor data is processed with AI and classical perception algorithms to detect pedestrians, vehicles, lanes, and signs, and to determine position, obstacle range, and velocity. This information is used to deliver driver warnings or to initiate active control of the vehicle, such as automatic braking to avoid a collision.
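The warning and braking step described above is often framed in terms of time-to-collision (TTC): the range to an object divided by the speed at which the gap is closing. A minimal sketch, assuming a hypothetical `Detection` record and illustrative thresholds (`WARN_TTC_S`, `BRAKE_TTC_S`) rather than any production system's logic:

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One tracked object as a perception stack might report it (hypothetical fields)."""
    label: str
    range_m: float           # distance to the object along the ego path
    closing_speed_ms: float  # positive when the gap is shrinking


# Hypothetical thresholds for illustration only; real systems tune these
# per function, speed range, and regulation.
WARN_TTC_S = 2.5
BRAKE_TTC_S = 1.2


def time_to_collision(det: Detection) -> float:
    """TTC = range / closing speed; infinite if the object is not approaching."""
    if det.closing_speed_ms <= 0.0:
        return float("inf")
    return det.range_m / det.closing_speed_ms


def decide(det: Detection) -> str:
    """Map a detection to an action: 'none', 'warn', or 'brake'."""
    ttc = time_to_collision(det)
    if ttc < BRAKE_TTC_S:
        return "brake"
    if ttc < WARN_TTC_S:
        return "warn"
    return "none"


# A pedestrian 20 m ahead with a 10 m/s closing speed gives TTC = 2.0 s.
print(decide(Detection("pedestrian", range_m=20.0, closing_speed_ms=10.0)))
```

Because TTC is computed directly from the range estimate, any depth error feeds straight into the timing of the warning or braking decision, which is why accurate distance estimation matters as much as detection.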

AVs have the much more complex objective of enabling driverless operation across the vehicle’s ODD. The vehicle, rather than a driver, is now responsible for correct and safe operation. As such, companies have heavily invested in vehicle architectures with extensive and costly sensor suites that include many cameras and radars, numerous lidars, and AI software autonomy stacks that handle the full operation of the vehicle. Recently, AV leaders have begun deploying vehicles for driverless paid rides, long-haul freight, and last-mile deliveries on the path toward commercial viability.

But these companies still have a road ahead to optimize the hardware and software for cost, power, and performance and to achieve scalable, effective deployment.

The unfortunate reality is that many of today’s production ADAS systems fail under the “corner case” scenarios and even struggle in good conditions, as highlighted again by the AAA (AAA, 2022). While much more capable than their ADAS counterparts, AV platforms are also hampered by the same challenges and in reality are still in the prototype and testing stage.

This accuracy and robustness gap continues to be confirmed in benchmarks during Algolux’s OEM and Tier 1 customer engagements. These ADAS / AV systems have a reduced ability to detect objects and estimate depth in driving scenarios with high sensor noise, low contrast, or occlusions, and when dealing with previously unseen objects and orientations.

Addressing Key Perception and Depth Gaps

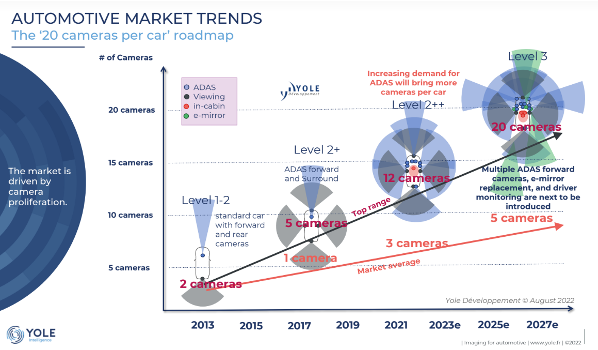

Cameras

Cameras are the predominant external sensor for ADAS, and these L2 – L3 systems are expected to use up to 20 cameras (Yole Developpement, 2022) ranging from 1.3MP to 8MP. They are also an increasingly important component in an AV system’s sensor suite [Figure 2].

Pros

- Best sensor for accurate object detection

- High resolution, color, and dynamic range

- Easy to mount and deploy

- Mature automotive sensor

- Low cost

Cons

- Poor performance in low light and poor weather

- Large amount of data to process for vision

- Lengthy manual image signal processor (ISP) tuning

- Low to mediocre mono / short-baseline stereo depth accuracy

- Calibration challenges
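The short-baseline stereo limitation above follows from standard pinhole stereo geometry: depth is Z = f·B/d for focal length f (in pixels), baseline B, and disparity d, so a fixed disparity error δd produces a depth error of roughly δZ ≈ Z²·δd/(f·B). The sketch below evaluates that first-order approximation; the focal length, matching error, and baselines are assumed values, not parameters of any particular camera or product.

```python
def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Pinhole stereo depth: Z = f * B / d."""
    return focal_px * baseline_m / disparity_px


def stereo_depth_error_m(depth_m: float, focal_px: float, baseline_m: float,
                         disparity_err_px: float) -> float:
    """First-order depth uncertainty: dZ ~= Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


FOCAL_PX = 2000.0        # assumed focal length in pixels
DISPARITY_ERR_PX = 0.25  # assumed sub-pixel matching error

# Compare a short (assumed 0.3 m) and a wider (assumed 1.5 m) baseline.
for baseline in (0.3, 1.5):
    for depth in (100.0, 500.0):
        err = stereo_depth_error_m(depth, FOCAL_PX, baseline, DISPARITY_ERR_PX)
        print(f"B={baseline} m, Z={depth:.0f} m -> +/-{err:.1f} m")
```

Under these assumptions, error grows with the square of distance and shrinks only linearly with baseline, so a 0.3 m baseline that gives roughly ±4 m at 100 m degrades to over ±100 m at 500 m.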

Radar

Radar is an active sensor that has been used in automotive for many years to determine object distance, velocity, and direction out to 200-300m. Recent “4D” radars deliver more details about objects, structures and road contours.

Pros

- Good depth accuracy

- Mature automotive sensor

- Highly robust to poor lighting and weather

Cons

- Poor choice for distinguishing and classifying objects

- Can be subject to interference and reflection issues

- Low resolution and limited field of view

- More costly than cameras
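Radar's direct distance and velocity measurements rest on two textbook relationships: range from the round-trip travel time of the echo, and radial velocity from the Doppler shift, f_d = 2v/λ. The sketch below shows only that underlying physics; real automotive radars (typically FMCW) derive these quantities from beat frequencies across chirp sequences rather than timing a single pulse, and the echo values here are illustrative assumptions.

```python
C = 299_792_458.0  # speed of light, m/s


def range_from_round_trip(t_round_trip_s: float) -> float:
    """The echo travels out and back, so range = c * t / 2."""
    return C * t_round_trip_s / 2.0


def radial_velocity_from_doppler(doppler_hz: float, carrier_hz: float) -> float:
    """Monostatic radar: f_d = 2 * v / lambda, so v = f_d * lambda / 2.

    A positive Doppler shift (higher received frequency) means the target is approaching.
    """
    wavelength_m = C / carrier_hz
    return doppler_hz * wavelength_m / 2.0


# 77 GHz automotive carrier (wavelength ~3.9 mm); echo values are illustrative.
print(range_from_round_trip(1.0e-6))                 # ~149.9 m
print(radial_velocity_from_doppler(10_000.0, 77e9))  # ~19.5 m/s
```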

Lidar

Lidar has been a very popular active sensor for autonomous vehicles and robotics, and the latest lidars have a practical effective range of 150-200m. While much of the initial work on autonomy was based on lidar, it is still a new automotive sensor type with low deployed volumes.

Pros

- Accurate depth information

- More angle resolution than radar

- Robust to low-light conditions

Cons

- Poor robustness in adverse weather (snow, fog, etc.), which dramatically reduces effective range

- Lower resolution than cameras, which decreases further with distance; cannot discern color

- Poor choice for distinguishing and classifying objects

- High cost
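The resolution limitation above is geometric: adjacent lidar beams are separated by a fixed angle, so the gap between returns grows linearly with range, s = 2·R·tan(Δθ/2). A minimal sketch, assuming a hypothetical 0.1° horizontal angular resolution and a 0.5 m-wide pedestrian; real sensors vary widely in resolution and scan pattern.

```python
import math


def point_spacing_m(range_m: float, angular_res_deg: float) -> float:
    """Lateral gap between neighbouring beams at a given range: s = 2 * R * tan(dtheta / 2)."""
    return 2.0 * range_m * math.tan(math.radians(angular_res_deg) / 2.0)


def beams_across(range_m: float, target_width_m: float, angular_res_deg: float) -> int:
    """Rough number of beams landing across a target (floor of width / spacing)."""
    return int(target_width_m // point_spacing_m(range_m, angular_res_deg))


ANGULAR_RES_DEG = 0.1     # assumed horizontal resolution
PEDESTRIAN_WIDTH_M = 0.5  # assumed target width

for r in (50.0, 100.0, 200.0):
    s = point_spacing_m(r, ANGULAR_RES_DEG)
    n = beams_across(r, PEDESTRIAN_WIDTH_M, ANGULAR_RES_DEG)
    print(f"{r:.0f} m: spacing {s:.3f} m, ~{n} beams across a pedestrian")
```

By comparison, a camera's per-pixel footprint is set by its field of view divided by its pixel count, which for multi-megapixel automotive cameras is usually considerably finer.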

Perception system developers use AI approaches to develop the inference models needed for each sensor modality. This typically requires a massive amount of accurate human-annotated data for training and testing, which is extremely time-consuming and expensive. There is also an underlying bias toward data that is easy to capture and annotate. For example, it’s much easier to schedule and capture images during clear sunny conditions than on foggy or snowy evenings, which can also be up to 10x more costly to annotate. More recent unsupervised approaches and simulated data ease this, but a significant burden remains.

While there probably won’t be one “magic” sensor to deliver next generation perception capabilities, there are clear targets that need to be achieved:

- Robust perception for all driving tasks in all conditions

- Highly accurate object detection with depth estimation

- Dense long-range depth estimation well beyond 500m

- Dense 3D representation and detection of all obstacles in the scene

- Flexible sensor configurations for any vehicle type and mounting position

- Real-time adaptive online calibration for multi-camera configurations

- Low system development and deployment costs

Algolux has been a recognized pioneer in the field of robust perception software for ADAS and AVs and has applied its novel AI technologies to deliver dense and accurate multi-camera depth and perception. It has validated and deployed solutions for both car and truck configurations with leading OEMs and Tier 1 customers.

Its recently announced Eos Robust Depth Perception Software resolves the range, resolution, cost, and robustness limitations of the latest lidar-, radar-, and camera-based systems and pairs this with a scalable, modular software perception suite [Figure 3].

You can learn more at www.algolux.com or contact Algolux at [email protected].

Sources

[1] World Health Organization (WHO), Road Traffic Injuries fact sheet (2022) https://www.who.int/news-room/fact-sheets/detail/road-traffic-injuries

[2] National Safety Council reference of World Health Organization (WHO), Global Health Estimates 2019: Deaths by Cause, Age, Sex, by Country and by Region, 2000-2019 (2022) https://injuryfacts.nsc.org/international/international-overview/

[3] Governors Highway Safety Association (GHSA), Pedestrian Traffic Fatalities by State (2022) https://www.ghsa.org/resources/news-releases/GHSA/Ped-Spotlight-Full-Report22

[4] IRU, Driver Shortage Global Report (2022) https://www.iru.org/system/files/IRU%20Global%20Driver%20Shortage%20Report%202022%20-%20Summary.pdf

[5] SAE International, SAE Levels of Driving Automation™ Refined for Clarity and International Audience (2021) https://www.sae.org/blog/sae-j3016-update

[6] American Automobile Association (AAA), Active Driving Assistance System Performance (May 2022) https://newsroom.aaa.com/2022/05/consumer-skepticism-toward-active-driving-features-justified/

[7] Yole Developpement, Computing and AI Technologies for Automotive (2022)