IDTechEx attended AutoSens 2019, held at the car museum in Brussels. The event covers all future vehicle perception technologies including lidar, radar, and camera, on the hardware side as well as on the software and data processing side. It is an excellent event with high-quality speakers drawn from established firms as well as start-ups working to advance automotive perception technologies. In general, it is a technology-focused event, designed by engineers for engineers. The next edition of AutoSens will be in Detroit on 12-14 May 2020. In this article, we summarize key learnings from talks pertaining to radars and camera systems. In another article, we summarized key learnings around lidar technologies.

We attended this event as part of our ongoing research in autonomous mobility as well as all associated perception technologies including lidars and radars. Our research offers scenario-based twenty-year forecasts, projecting the rise of various levels of autonomous vehicles. Our forecasts not only focus on unit sales but also offer a detailed price evolution projection segmented by all the key constituent technologies in an autonomous vehicle. Our research, furthermore, considers the impact of shared mobility on total global vehicle sales, forecasting a peak car scenario. For more information please see the IDTechEx report “Automotive Radar 2020-2040: Devices, Materials, Processing, AI, Markets, and Players”. This report also covers all enabling technologies including lidar, radar, camera, HD mapping, security systems, 5G, and software.

Furthermore, we have in-depth and comprehensive research on lidar and radar technologies. Our report “Automotive Radar 2020-2040: Devices, Materials, Processing, AI, Markets, and Players” develops a comprehensive technology roadmap, examining the technology at the levels of materials, semiconductor technologies, packaging techniques, antenna arrays, and signal processing. It demonstrates how radar technology can evolve towards becoming a 4D imaging radar capable of providing a dense 4D point cloud that can enable object detection, classification, and tracking. The report builds short- and long-term forecast models segmented by the level of ADAS and autonomy.

Arbe Robotics: high-performance radar with trained deep neural networks

Radar technology is already used in automotive to enable various ADAS functions. This technology is however changing fast. A shift is taking place towards smaller node CMOS or SOI chips, enabling higher monolithic function integration within the chip itself. The operational frequency is going higher with benefits in velocity and angular resolutions. The bandwidth is widening, improving range resolution. Large antenna arrays are being developed, dramatically improving the virtual aperture size and angular resolution. The point cloud is also densifying, potentially enabling much more deep-learning AI. These trends will lead to 4D imaging radar which can be considered an alternative to lidars.

Arbe is an Israeli company that is at the forefront of some of these trends. It is unique in that it has set out to design its own chip and to develop its own algorithms for radar signal processing. Its chip will be made by GlobalFoundries on a 22nm fully depleted SOI technology (likely in Dresden, Germany). The choice of 22nm is important. On the front end, it allows boosting the frequency up to the required level. On the digital end, it enables the integration of sufficiently advanced modules.

The architecture has 48Tx and 48Rx. Here, most likely 4 (perhaps 5) transceiver chips are used. An in-house processor, likely on the same technology node, is designed to run the system and process the data to output a 4D point cloud. The IC will likely be ready later this year. This radar aims to achieve a resolution of 1deg in azimuth and 2deg in elevation. The HFoV and VFoV are 30 and 100deg respectively. Range is suggested as 300m.
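To see why a large antenna count matters, note that in an ideal MIMO radar each transmit/receive pair contributes one virtual channel. The sketch below counts those channels for the 48Tx/48Rx architecture and uses a small toy layout to show how the virtual aperture grows. The function names and the toy antenna positions are illustrative assumptions; Arbe's actual array geometry was not disclosed in the talk.

```python
def virtual_channels(num_tx: int, num_rx: int) -> int:
    """Count the Tx/Rx pairs of an ideal MIMO radar (one virtual channel each)."""
    if num_tx <= 0 or num_rx <= 0:
        raise ValueError("antenna counts must be positive")
    return num_tx * num_rx


def virtual_positions(tx_positions, rx_positions):
    """Return the unique virtual element positions (sum of each Tx and Rx position)."""
    return sorted({tx + rx for tx in tx_positions for rx in rx_positions})


# Channel count for the 48Tx / 48Rx architecture described above.
arbe_channels = virtual_channels(48, 48)
print(arbe_channels)  # 2304

# Toy linear layout (hypothetical, in units of half a wavelength):
# 2 Tx spaced 4 units apart and 4 Rx spaced 1 unit apart fill 8 contiguous positions.
toy_positions = virtual_positions([0, 4], [0, 1, 2, 3])
print(toy_positions)  # [0, 1, 2, 3, 4, 5, 6, 7]
```

In the toy case, six physical antennas behave like an eight-element receive array, which is the mechanism behind the improved angular resolution of large MIMO arrays.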

Arbe is unique in that it is not just focused on the hardware design. It is also focused on processing the data that comes off its advanced radar. Arbe is developing what it terms the arbenet. The very high-level representation of the arbenet is shown below. It might be that Arbe needs to do this mainly to demonstrate what is possible with such radars, in which case the hardware design will likely remain the core competency.

Arbe is working on clustering, generation of target lists, tracking of moving objects, localization relative to a static map, Doppler-based classification, etc. It can use Doppler gradients to measure changes in orientation, which might give hints about intention. It will do free-space mapping and use the radar for good ego-velocity estimates.
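As an illustration of radar-based ego-velocity estimation, the following minimal sketch fits a forward speed to the Doppler readings of static targets. It assumes a 2D scene, a forward-facing radar moving along its boresight, and detections already known to be static, so each target at azimuth θ shows a radial velocity of -v·cos(θ). This is a textbook least-squares fit with hypothetical names and data, not Arbe's algorithm.

```python
import math


def estimate_ego_speed(detections):
    """Least-squares forward speed from (azimuth_rad, radial_velocity_m_s) pairs.

    Assumes every detection is a static object, so v_r = -v_ego * cos(azimuth).
    """
    numerator = sum(-radial * math.cos(azimuth) for azimuth, radial in detections)
    denominator = sum(math.cos(azimuth) ** 2 for azimuth, _ in detections)
    if denominator == 0.0:
        raise ValueError("need at least one detection away from +/-90 degrees")
    return numerator / denominator


# Synthetic, noise-free detections for a vehicle travelling at 20 m/s (hypothetical data).
true_speed = 20.0
azimuths_deg = [-40, -20, 0, 15, 35]
detections = [
    (math.radians(a), -true_speed * math.cos(math.radians(a))) for a in azimuths_deg
]

estimated_speed = estimate_ego_speed(detections)
print(round(estimated_speed, 6))
```

With real data, moving objects would first have to be rejected as outliers, for example with a robust fit, before the static-target assumption holds.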

This year is likely to be a critical year for Arbe as many OEMs will make decisions about the sensor suite of choice for the next 3-5 years.

Images showing how radar data can offer object detection, per-frame velocity information, and determination of the drivable path. For more information about evolution of radar technology towards 4D imaging please refer to “Automotive Radar 2020-2040: Devices, Materials, Processing, AI, Markets, and Players” (www.IDTechEx.com/Radar)

Imec: In-cabin monitoring and gesture recognition using 145GHz radar

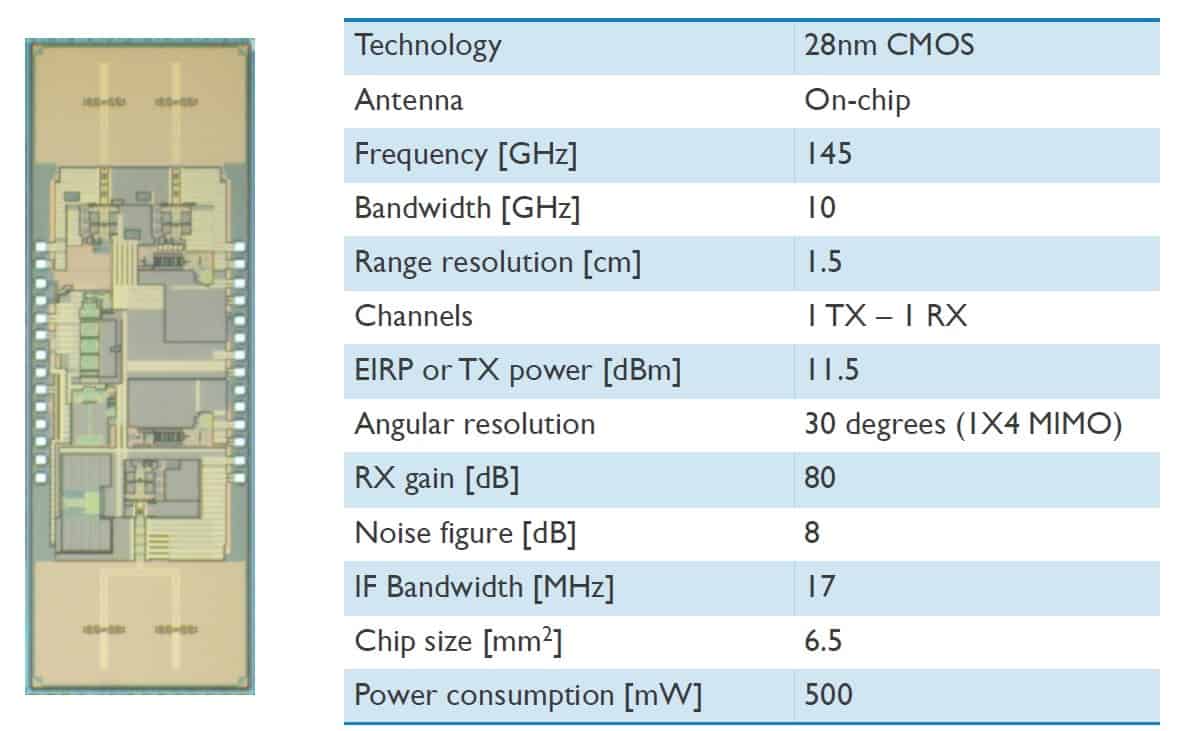

Imec presented an interesting radar development. They have developed a 145 GHz radar with a 10 GHz bandwidth. This is a single-chip 28nm CMOS SoC solution with an antenna-on-chip implementation. It is thus truly a radar-in-a-chip solution. The large bandwidth and high frequency operation result in high range, velocity, and angular separation resolution.
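The link between bandwidth and range resolution can be made concrete with the standard relation ΔR = c / (2B). The short sketch below applies it to the 10 GHz bandwidth quoted above and also computes the wavelength at 145 GHz. It assumes the full bandwidth is swept, and the figures are theoretical limits rather than measured imec results.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_resolution_m(bandwidth_hz: float) -> float:
    """Theoretical range resolution of a radar sweeping the given bandwidth."""
    return SPEED_OF_LIGHT_M_S / (2.0 * bandwidth_hz)


def wavelength_m(carrier_hz: float) -> float:
    """Free-space wavelength at the given carrier frequency."""
    return SPEED_OF_LIGHT_M_S / carrier_hz


resolution_cm = range_resolution_m(10e9) * 100.0   # 10 GHz bandwidth
wavelength_mm = wavelength_m(145e9) * 1000.0       # 145 GHz carrier

print(f"range resolution: {resolution_cm:.2f} cm")  # about 1.5 cm
print(f"wavelength: {wavelength_mm:.2f} mm")        # about 2.07 mm
```

For comparison, the same formula gives roughly 15 cm for a 1 GHz sweep, which is why widening the bandwidth matters so much for short-range sensing.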

The millimetre-scale resolution can enable multiple applications. Imec demonstrated in-cabin driver monitoring. Here, this low-clutter environment includes background vibration, but the target is largely static. As the results below show, this radar can fairly accurately track the heartbeat and respiration rhythm of the driver, enabling remote monitoring. To get this result, additional algorithmic compensations are naturally required. This solution requires no background light and does not compromise privacy.
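Vital-sign monitoring of this kind typically relies on the phase of the reflected signal rather than on range bins: a target displacement d changes the round-trip phase by 4πd/λ. The sketch below converts between phase and displacement at 145 GHz. The 0.1 mm chest movement is a hypothetical value chosen for illustration, and the code is not imec's processing chain.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0
CARRIER_HZ = 145e9  # carrier frequency quoted in the text


def phase_change_rad(displacement_m: float, carrier_hz: float) -> float:
    """Round-trip phase change caused by a small radial displacement."""
    wavelength = SPEED_OF_LIGHT_M_S / carrier_hz
    return 4.0 * math.pi * displacement_m / wavelength


def displacement_m(phase_rad: float, carrier_hz: float) -> float:
    """Radial displacement that produces the given round-trip phase change."""
    wavelength = SPEED_OF_LIGHT_M_S / carrier_hz
    return wavelength * phase_rad / (4.0 * math.pi)


# Hypothetical 0.1 mm chest-surface movement caused by a heartbeat.
heartbeat_phase_rad = phase_change_rad(1e-4, CARRIER_HZ)
print(f"{math.degrees(heartbeat_phase_rad):.1f} degrees of phase shift")
```

Because the wavelength is only about 2 mm, even sub-millimetre movements produce a phase shift of tens of degrees, which is what makes heartbeat tracking feasible at this frequency.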

Imec has also proposed 3D gesture control using its compact (6.5 mm²) single-chip solution. Here, the radar transmits omnidirectionally and calculates the angle-of-arrival using the phase differences measured at its four different receive antennas. To make sense of the angle-of-arrival estimates, imec is training an algorithm on 25 people and a set of 7 gestures. This technology, the software and the hardware together, can offer true 3D gesture recognition for consumer products.
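The angle-of-arrival calculation can be sketched for the simplest case of two receive antennas. A plane wave arriving at angle θ produces a phase difference Δφ = 2π·d·sin(θ)/λ between antennas spaced d apart, so θ = arcsin(λ·Δφ / (2π·d)). The half-wavelength spacing and the example phase difference below are assumptions for illustration; imec's actual antenna layout and estimator were not detailed.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def angle_of_arrival_rad(phase_diff_rad: float, spacing_m: float, wavelength_m: float) -> float:
    """Angle of arrival from the phase difference between two receive antennas."""
    sine = phase_diff_rad * wavelength_m / (2.0 * math.pi * spacing_m)
    if abs(sine) > 1.0:
        raise ValueError("phase difference is not physical for this spacing")
    return math.asin(sine)


wavelength = SPEED_OF_LIGHT_M_S / 145e9   # about 2.07 mm at 145 GHz
spacing = wavelength / 2.0                # assumed half-wavelength spacing

# A measured phase difference of 90 degrees (hypothetical value).
angle = angle_of_arrival_rad(math.pi / 2.0, spacing, wavelength)
print(f"{math.degrees(angle):.1f} degrees")  # 30.0 degrees
```

With four receive antennas arranged in two dimensions, the same relation applied along each axis would give both azimuth and elevation, which is the raw input a gesture classifier would work from.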

GEO Semiconductor: In-Camera CV for NCAP Pedestrian Rear AEB using classical computer vision

GEO Semiconductor is a major player in automotive camera modules. It claims to have more than 170 OEM design wins.

NHTSA estimated that in 2015 there were 188k reversing accidents, 12k pedestrian injuries, and 285 deaths in the US. It is shown that passive and active rear cameras can reduce accidents by 42% and 78%, respectively. Consequently, as of May 2018, all new US cars are mandated to have a rear camera.

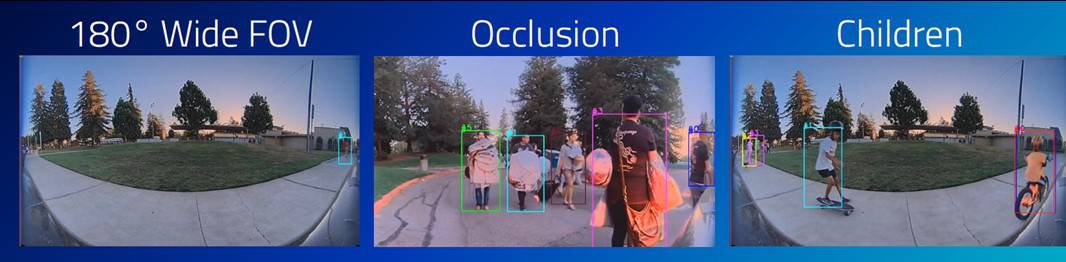

The active rear camera, however, presents multiple challenges. In particular, the 180deg images can make pedestrians appear small. There are also problems with occlusion and children running into the scene. Some examples are shown below.

Active cameras require image recognition. They need to detect and classify objects. A challenge is that rear camera modules are hermetically sealed and therefore do not dissipate heat well (although one could imagine a formed metallic case with built-in fins). As a consequence, GEO argues, the power consumption levels must be kept to an absolute minimum. It suggests that in the worst case the camera and the processor/memory can consume 1.5W and 0.7W, respectively.

Two general approaches exist towards computer vision, as shown below: (1) the classical approach in which the features are handcrafted and then passed to a trained classifier; and (2) the deep learning (DL) approach in which a deep neural network is trained to extract the results.

The trend is towards DL approaches because they provide much better accuracy, going beyond the performance limits of the classical approach. The challenge though, in this particular case, is that DL approaches consume too much power. This is shown in the table below. Therefore, given the power consumption constraints, the designers continue to use the classical approach until such time that the DL approaches become more efficient, either through better algorithms or dedicated chip architectures.

Ambarella: short-range obstacle detection using stereo vision

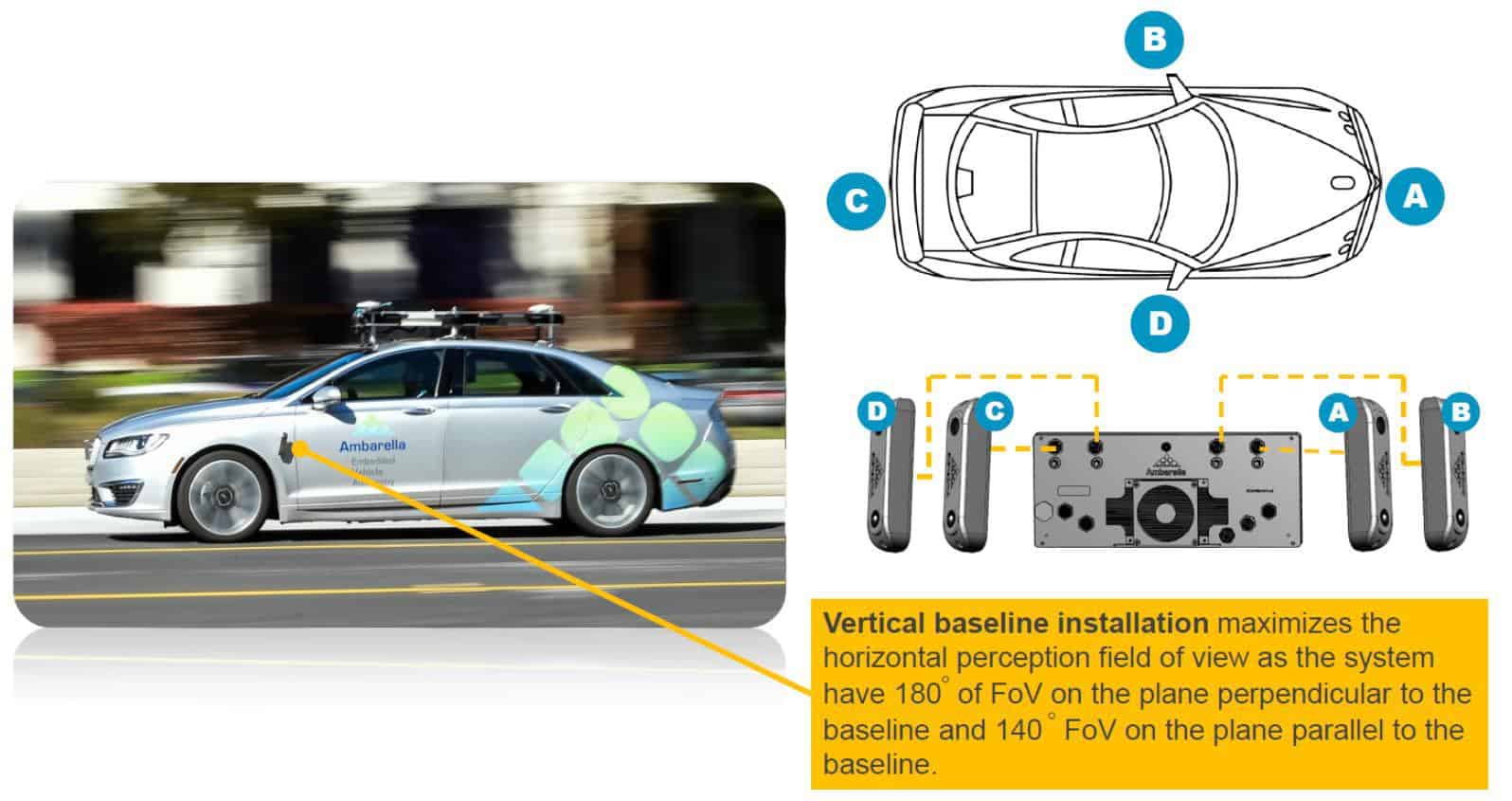

Ambarella is a California-based company. In the autonomous mobility and ADAS sector, it is offering a short-range surround stereo vision system to detect objects in 3D space. The idea is that the system can compete with and outperform radar and sonar.

The system consists of stereo cameras located around the vehicle, as shown below, to generate a surround view. The cameras are 8MPx with 10cm baseline and use a 180deg lens. The illumination source is 850nm. The cameras all feed into a central processing ECU which can support 8 cameras and perform object detection calculations at a rate of 30 fps (frames per second). The sensor system has a range of 8-10m.
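To show how a stereo pair turns image disparity into distance, the sketch below uses the standard rectified pinhole relation Z = f·B/d with the 10cm baseline quoted above. The focal length of 1000 pixels is a hypothetical value, and a real 180deg lens would first need fisheye rectification, so the numbers only indicate orders of magnitude and are not Ambarella's figures.

```python
BASELINE_M = 0.10          # 10 cm baseline quoted in the text
FOCAL_LENGTH_PX = 1000.0   # hypothetical focal length in pixels (assumption)


def depth_m(disparity_px: float) -> float:
    """Depth of a point from its disparity in a rectified stereo pair."""
    if disparity_px <= 0.0:
        raise ValueError("disparity must be positive")
    return FOCAL_LENGTH_PX * BASELINE_M / disparity_px


def depth_error_m(depth: float, disparity_error_px: float = 1.0) -> float:
    """Approximate depth uncertainty for a given disparity error."""
    return depth ** 2 / (FOCAL_LENGTH_PX * BASELINE_M) * disparity_error_px


near = depth_m(100.0)   # large disparity: object close to the vehicle
far = depth_m(10.0)     # small disparity: object near the stated range limit

print(f"near object: {near:.1f} m, error per pixel: {depth_error_m(near):.2f} m")
print(f"far object: {far:.1f} m, error per pixel: {depth_error_m(far):.2f} m")
```

Under these assumptions the depth error grows with the square of the distance, which is consistent with a short baseline system being positioned as a short-range sensor.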

The company shows results suggesting that the stereo vision outperforms other sensor systems, especially when detecting thin bars located close to the vehicle.

Eyeris: In-vehicle Scene Understanding AI for Autonomous and Highly Automated Vehicles

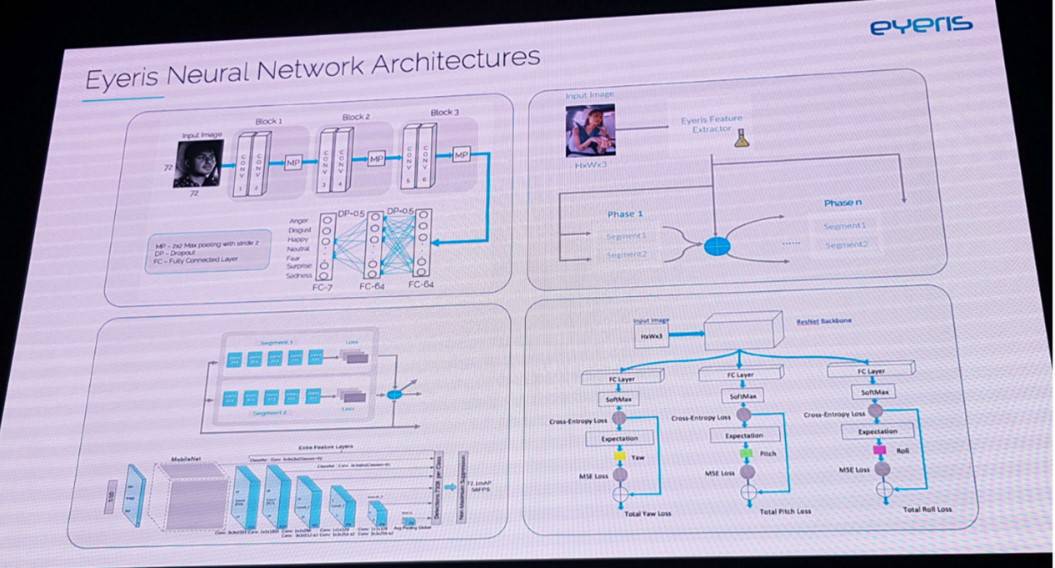

Eyeris has an interesting proposition: to use cameras, trained deep neural networks, and a specially designed AI processor to offer a detailed semantic understanding of the interior of vehicles. Eyeris argues, with good reason, that in the very long term autonomous mobility will transform the design of vehicle interiors. As such, there will be a strong motivation to automatically monitor the interior of vehicles.

Today, not many firms are focused on this task. Most firms in the autonomous mobility field are focused on leveraging a large perception sensor suite consisting often of cameras, lidars, and radars to develop a detailed understanding of the outside environment. Eyeris proposes to do this for vehicle interiors.

The proposition is to achieve this task using standard 2D cameras which can be located anywhere within the cabin. The processing is also to take place on the edge without having to make regular recourse to the cloud. This is shown below.

To this end, Eyeris has designed and trained 10+ deep neural networks inferenced on the edge. To train their DNNs, Eyeris has spent large sums and five years collecting more than 10M images from 3100 subjects. All these images are annotated. Furthermore, they have come up with a unique interior image segmentation method to allow them to understand where everything is relative to each other. The architecture of their various neural networks is shown below.

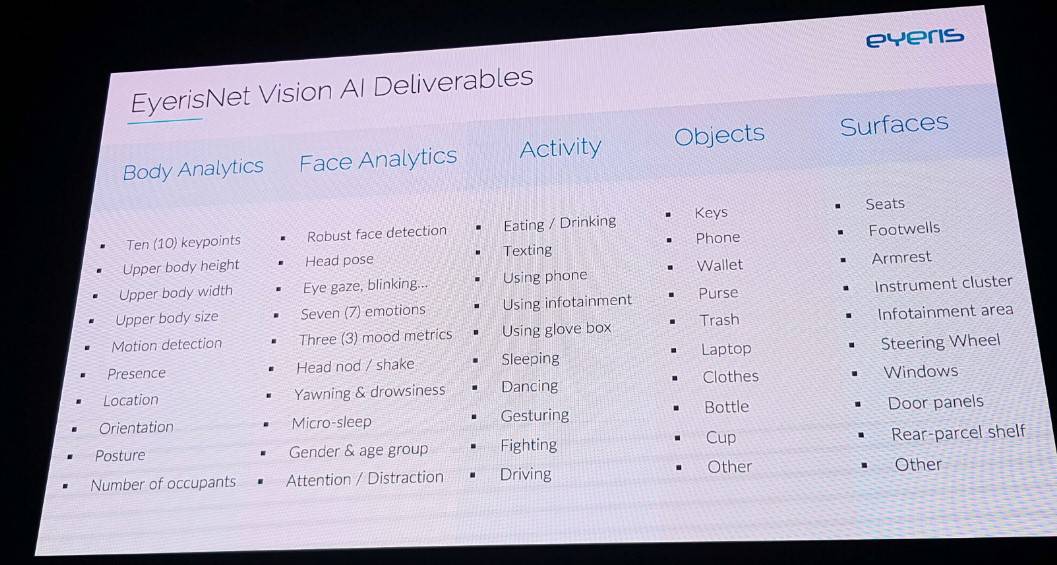

The DNNs will be able to offer many analyses. These include human behaviour understanding based on images of the body, face, and activity; object localization which can yield class, size, and position information; and surface classification which can give pixel maps, surface contours, and shapes.

The so-called EyerisNet Vision AI can deliver the outcomes shown below. This information can then be used in improving safety, e.g., automatic deployment of airbags, driver monitoring, automating the control of comfort functions, and many other services.

Eyeris demonstrated its system with On Semiconductor and Ambarella. This joint system includes On Semiconductor’s new global shutter RGB-IR image sensor, Ambarella’s RGB-IR processing SoC, and Eyeris’ in-vehicle scene understanding artificial intelligence.

Eyeris’ DNN architectures have been designed into computer vision-specific AI chip hardware solutions to enable efficient and fast real-time edge calculation. It recently announced the implementation of its DNNs on a 16nm Zynq UltraScale+ MPSoC technology from Xilinx, Inc. Eyeris has also recently (early 2020) announced that it is evolving its AI DNNs beyond just 2D vision to enable sensor fusion with data supplied from thermal, radar, and camera sensors.

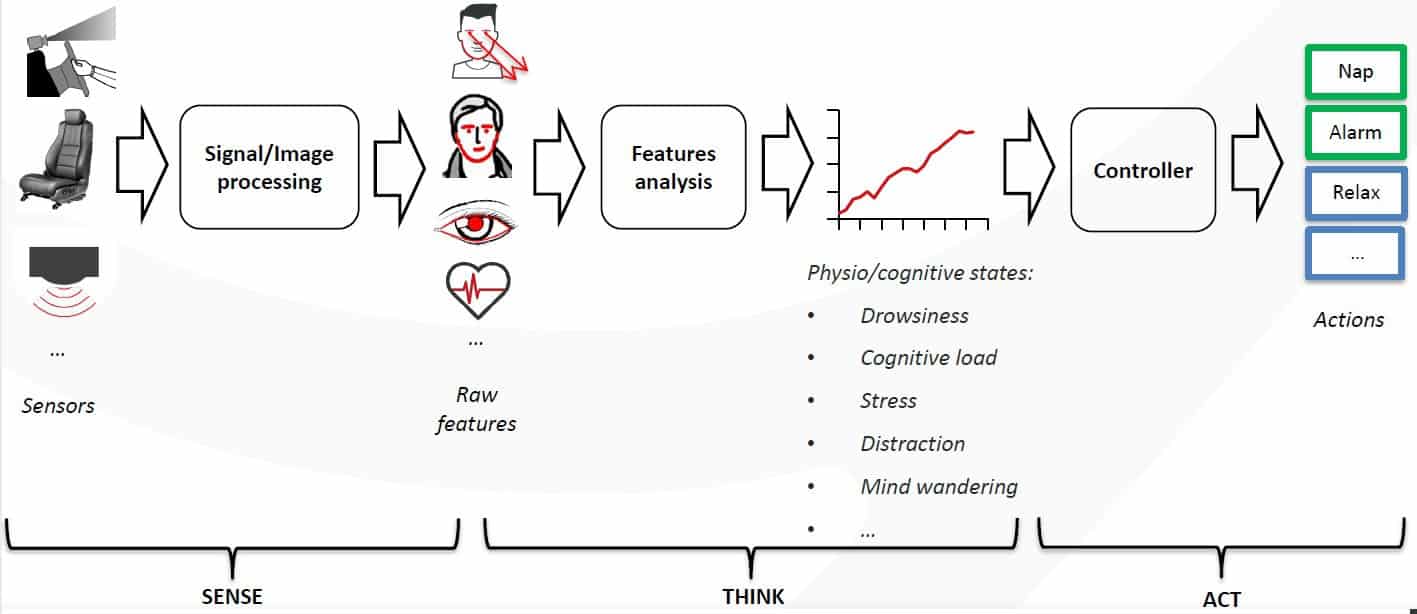

Phasya: software to make judgements about physiological and cognitive state of drivers

Phasya is a firm specialized in offering a software portfolio aimed at monitoring several physiological and cognitive states. Its software is fed ocular and cardiac data. It runs feature analysis on these data to draw judgements about the physiological and cognitive state of the driver, such as drowsiness, cognitive load, stress, distraction, and so on.

The image below shows the setup that Phasya uses to develop its ground truth data. The driver’s condition is monitored using EEG/EOG during a simulated drive. This ground truth forms the basis of Phasya’s algorithms, enabling it to draw physiological and cognitive judgements about the driver.

This type of software is of course essential as driving approaches higher levels of ADAS and autonomy. It helps with safety functions in lower-level ADAS and in managing the driver-machine transitions in higher-level ADAS and highly automated driving.

Written by Dr Khasha Ghaffarzadeh

Focus on RADAR technology at AutoSens in Detroit 2020

The AutoSens in Detroit agenda will provide comprehensive coverage of developments in RADAR for ADAS and AV. Yole Développement will be reviewing competing RADAR technologies, including future trends and how RADAR is being affected by the emergence of LiDAR.

Developments in mmWave radar will be covered by General Radar, and CTO Dmitry Turbiner will detail how today’s leading advanced RADAR sensors, pioneered by military aircraft, are now becoming affordable for automotive use. This provides incredibly fine object resolution in 3D, protected by hardened security systems that resist interference and jamming. This approach also allows us to use radar cross sections to identify targets and predict how they will move/behave.

Echodyne will then explore how the AV stack can mimic human sensing capabilities through dynamic allocation of radar resources, drawing on the immense knowledge repositories and other real-time data sources to resolve ambiguity in the driving scene. The talk will include data collected from multiple driving scenes that demonstrate advanced radar imaging resources solving edge and corner cases.

ZF TRW Automotive will present its proposed approach to adapting low-cost perception trackers to high-quality automotive Doppler radars, which could help enable future L2+ AD systems.

Radar sensors can also enable in-cabin monitoring and short range ADAS functions and imec will discuss these applications, including gesture recognition, climate control, health monitoring and audio sectioning.