A key focus area at AutoSens later this month will be image quality, covering current and future standards and highlighting the latest technology developments. We'll be exploring the evolution of image quality in ADAS cameras and discussing how best to tackle the challenges ahead. As a taster of these discussions, we asked three experts from Sheba Microsystems, indie Semiconductor, and TRIOPTICS for their views on specific image quality challenges.

Improving image quality with MEMS electrostatic actuation technology

We asked Dr. Faez Ba-Tis, CEO of Sheba Microsystems Inc.: The industry is constantly seeking to improve image quality and clarity. Can you describe any innovative approaches or techniques you've developed towards this goal for ADAS camera outputs?

The best solution to any problem is the one that tackles its root, not the one that deals with its side effects. Poor image quality, noise, and blur are all side effects of not getting a crisp, sharp image out of the sensor.

In smartphones, we have seen image quality evolve and improve significantly over the past two decades. One of the main contributors to this high-quality imaging in that market was the introduction of autofocus actuators for compact cameras, largely voice coil motors, which also helped imaging go from 2 MP to high-resolution imaging in the tens of megapixels.

Unfortunately, such electromagnetic actuators, and even other actuation techniques such as piezo, are not reliable enough for the automotive environment, where temperatures exceed 100 °C and the optics are much heavier than smartphone lenses.

MEMS electrostatic actuation technology using a sensor-shift approach stands out as a solution with great potential to revolutionize the image quality of ADAS and other automotive cameras. It solves lens defocus and thermal drift issues, providing optimal image quality in the first place and paving the way for higher-resolution imaging and smaller pixel sizes in automotive cameras. It also eliminates the need for other solutions that were developed to deal with the blurred images caused by thermal drift. MEMS electrostatic actuators are thermally stable and highly precise, and they achieve active athermalization of automotive cameras in the most efficient way.
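Active athermalization is described here only at a high level. As a minimal sketch of one possible scheme (a temperature-driven feedforward, not necessarily how Sheba's actuators are controlled), the code below assumes thermal focus drift is linear in temperature and commands the sensor to follow the predicted focal-plane shift, clamped to the actuator's travel. The class name, the 0.15 µm/°C drift coefficient, and the ±30 µm stroke are all hypothetical values chosen for illustration; a real module might instead close the loop on a measured sharpness metric.

```python
from dataclasses import dataclass


@dataclass
class ThermalFocusModel:
    """Hypothetical linear model of thermal focus drift for one camera module.

    All default values are illustrative assumptions, not data for any real
    lens, sensor, or MEMS actuator.
    """
    ref_temp_c: float = 25.0      # temperature at which the module was focused
    drift_um_per_c: float = 0.15  # assumed focal-plane shift per degree C (um)
    stroke_um: float = 30.0       # assumed +/- travel of the sensor-shift actuator

    def focal_shift_um(self, temp_c: float) -> float:
        """Predicted focal-plane shift along z relative to the reference temperature."""
        return self.drift_um_per_c * (temp_c - self.ref_temp_c)

    def sensor_command_um(self, temp_c: float) -> float:
        """z position to command so the sensor follows the shifted focal plane,
        clamped to the actuator's mechanical range."""
        shift = self.focal_shift_um(temp_c)
        return max(-self.stroke_um, min(self.stroke_um, shift))


model = ThermalFocusModel()
for t in (-40.0, 25.0, 105.0):
    print(f"{t:6.1f} C -> move sensor {model.sensor_command_um(t):+.2f} um")
```

Moving the sensor rather than the lens is the point of the sensor-shift approach described above: the actuator carries the light imager instead of the comparatively heavy automotive optics.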

AUTOSENS USA 2024 ROUNDTABLE SESSION

The importance of autofocus technology in achieving high-quality, high resolution imaging for ADAS automotive cameras

Addressing challenges in colour accuracy

Pen Ling, VP & Chief Technology Officer – Vision BU at indie Semiconductor, is an expert in advanced image processing. We asked him: How does the choice of colour filter array impact colour accuracy and dynamic range in outward-facing cameras for ADAS?

For ADAS applications, camera sensor sensitivity is crucial, especially for low-light performance. From the sensor vendor's point of view, a low-cost way to increase the sensitivity of a traditional RGB sensor is simply to replace the green filter of the sensor's Colour Filter Array (CFA) with a clear filter (or something close, such as a yellow filter). While these alternative CFAs (such as RCCB, RYYCy, and RCCG) provide significantly better low-light sensitivity (about 1.8x that of RGGB), they also bring a new challenge: colour accuracy. It is well known in the automotive industry that these non-RGB CFA sensors suffer from poor red/yellow traffic light discrimination. Yet even this severe drawback has not stopped some major Tier-1s and OEMs from adopting RCCB, RCCG, and RYYCy sensors in real products. For example, Mobileye uses an RYYCy sensor, Tesla uses an RCCB sensor, and we are seeing RCCG being adopted as well. This demonstrates how important non-RGB CFA sensors are for ADAS applications.
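How much the quoted 1.8x sensitivity gain improves signal-to-noise ratio depends on which noise source dominates. A minimal sketch using the textbook limits: when photon shot noise dominates, SNR grows with the square root of the signal; when read noise dominates (very low light), SNR grows linearly with it. This assumes the full 1.8x reaches the channel of interest and ignores any noise added later in processing; the function name is illustrative only.

```python
import math


def snr_gain_db(sensitivity_ratio: float, regime: str) -> float:
    """SNR improvement (dB) from collecting `sensitivity_ratio` times more signal.

    regime="shot": photon-shot-noise limited, SNR ~ sqrt(signal)
    regime="read": read-noise limited, SNR ~ signal
    """
    if regime == "shot":
        return 20 * math.log10(math.sqrt(sensitivity_ratio))
    if regime == "read":
        return 20 * math.log10(sensitivity_ratio)
    raise ValueError(f"unknown regime: {regime!r}")


for regime in ("shot", "read"):
    print(regime, round(snr_gain_db(1.8, regime), 2))  # shot 2.55, read 5.11
```

So, under these idealized assumptions, the 1.8x figure corresponds to roughly 2.6 dB to 5.1 dB of extra SNR, with the larger benefit in the darkest scenes.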

For a long time, the alternative CFA and/or the related sensor design has been blamed as the root cause of the colour accuracy issue. This has even driven some sensor vendors to tweak the colour response spectrum of the alternative CFA, especially the red channel. That in turn creates another challenge: reduced red sensitivity and therefore more severe colour noise. For HDR sensors, this severe colour noise appears not only in low light but also in the HDR transition region, even in bright daylight, and it is difficult to remove with traditional noise reduction methods.

In recent years, people have started to look at the entire camera pipeline for alternative CFA solutions and have gradually realized that using a traditional RGGB ISP (Image Signal Processing) to process alternative CFA sensors may not be the optimal approach. As a result, new ISP architectures and algorithms have been developed that handle alternative CFAs correctly and resolve the colour accuracy issue. This new ISP technology allows ADAS applications to take full advantage of the improved low-light SNR of non-RGB CFA sensors without the side effect of poor colour reproduction and, most importantly, without the red/yellow traffic light discrimination issue.
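The ISP technology referred to above is not described in detail, so the sketch below does not attempt to reproduce it. It only illustrates, under a deliberately idealized assumption, why recovering green from a clear channel can amplify noise. If a clear pixel is assumed to see C = R + G + B, a naive conversion recovers green as G = C - R - B, which is a 3x3 colour conversion matrix. Assuming independent, equal-variance noise on the three inputs, each output channel's noise is scaled by the Euclidean norm of its matrix row. The matrix and helper names are hypothetical; real clear-filter responses are not an exact sum of R, G, and B.

```python
import math

# Idealized assumption (hypothetical): a clear pixel sees C = R + G + B.
# Under that assumption a naive pipeline recovers green as G = C - R - B,
# i.e. the 3x3 conversion from (R, C, B) to (R, G, B) below.
RCB_TO_RGB = [
    [1.0, 0.0, 0.0],    # R' = R
    [-1.0, 1.0, -1.0],  # G' = C - R - B
    [0.0, 0.0, 1.0],    # B' = B
]


def convert(m, rcb):
    """Apply a 3x3 colour conversion matrix to one (R, C, B) pixel."""
    return [sum(m[i][j] * rcb[j] for j in range(3)) for i in range(3)]


def noise_gain(m):
    """Per-output-channel noise amplification, assuming independent,
    equal-variance noise on the three input channels."""
    return [math.sqrt(sum(c * c for c in row)) for row in m]


r, g, b = 0.2, 0.5, 0.1
print(convert(RCB_TO_RGB, [r, r + g + b, b]))  # approximately [0.2, 0.5, 0.1]
print(noise_gain(RCB_TO_RGB))                   # green row gain is sqrt(3)
```

In this toy model the recovered green channel carries about 1.73x the input noise, one simple way to see how colour noise can grow when a non-RGB mosaic is forced into an RGB representation.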

Regarding the impact on dynamic range: in theory, the alternative CFA reduces dynamic range by about 4% because of its 1.8x sensitivity increase over RGGB. In practice, however, the impact is not significant. Almost all of the latest ADAS HDR sensors reach a dynamic range of 140 dB (some even hit 150 dB), and the 1.8x sensitivity advantage also pushes the noise floor further down.
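One reading consistent with the "about 4%" figure: if the pixel collects 1.8x more signal, it saturates 1.8x sooner, so the top of the range drops by 20·log10(1.8) ≈ 5.1 dB. Measured against a 140 dB sensor, that is roughly 3.6% of the range. The sketch below only reproduces this arithmetic. It assumes the input-referred noise floor is unchanged, which is the pessimistic case; the function name is illustrative.

```python
import math


def dr_loss_db(sensitivity_ratio: float) -> float:
    """Headroom lost (dB) if the pixel saturates `sensitivity_ratio` times sooner
    while the input-referred noise floor stays where it was."""
    return 20 * math.log10(sensitivity_ratio)


loss = dr_loss_db(1.8)
for sensor_dr_db in (140.0, 150.0):
    print(f"{sensor_dr_db:.0f} dB sensor: lose {loss:.1f} dB "
          f"({100 * loss / sensor_dr_db:.1f}% of range)")
```

If the extra sensitivity also lowers the input-referred noise floor by a similar factor, the two effects largely cancel in dB terms. This matches the point above that the practical impact is small.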

AUTOSENS USA 2024 AGENDA SESSION

VP & Chief Technology Officer – Vision BU

indie Semiconductor

Optical alignment challenges and how we can address them

We asked Dirk Seebaum, Product Manager at TRIOPTICS: Can you explain how advancements in sensor technology have improved image quality and sensitivity in cameras for ADAS? What considerations are important when selecting sensor types and sizes for ADAS camera systems?

Advanced driver assistance systems (ADAS) have evolved rapidly to increase safety and improve the driving experience. A key contributor to this development is the continuous improvement of camera technology, particularly image sensors with higher resolutions, which allow objects to be recognized at greater distances or a wider field of view (FOV) to be covered. However, these advances bring their own challenges, particularly in the optical alignment of the camera module, which must be precise to keep image quality high.

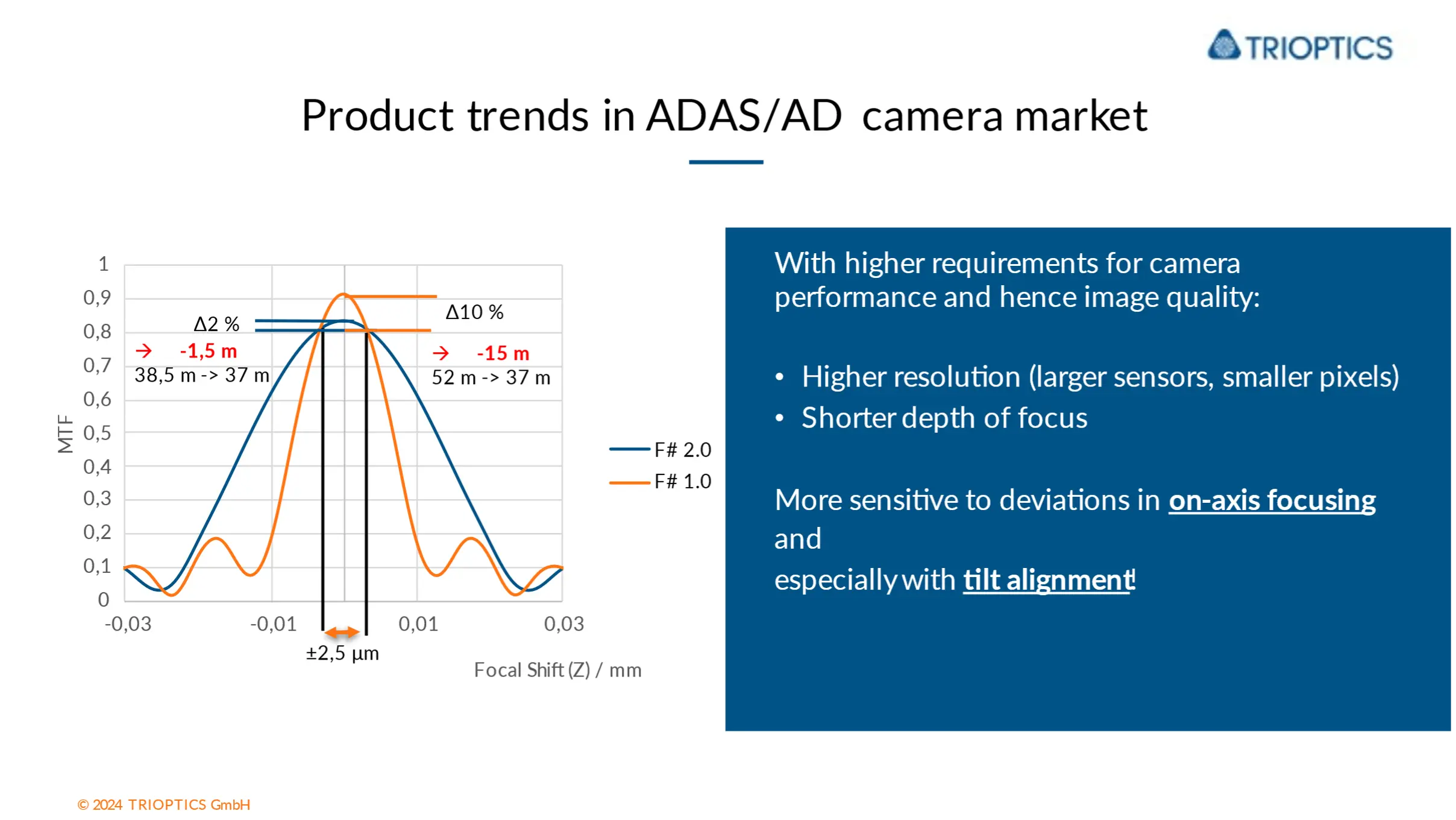

The quest for higher resolution in ADAS cameras has led to the development of larger image sensors with smaller pixel sizes. In addition, smaller f-numbers mean larger apertures, which let more light reach the sensor area and improve image quality. One consequence of these advances is a narrowing of the through-focus curve. The through-focus curve shows how image sharpness (MTF) varies with focus position, and its width represents the depth of focus: the range of lens-to-imager distances within which the image stays acceptably sharp. A narrower curve means that image quality is more sensitive to changes in the z-direction (the distance from the lens to the imager). This particularly affects tilt alignment on larger sensors, because a given tilt moves the sensor corners further out of focus. This increased sensitivity requires more precise optical alignment to achieve optimum sharpness across the entire image.

Fig. 1: Through-focus curve and principal effects on MTF
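The narrowing of the through-focus curve can be estimated with the standard thin-lens approximation for a distant object. The image-side depth of focus is roughly ±N·c, where N is the f-number and c is the largest acceptable blur-circle diameter. The sketch below assumes c equals one pixel pitch and compares two hypothetical designs. These f-numbers and pixel sizes are illustrative and are not the designs behind Fig. 1.

```python
def depth_of_focus_um(f_number: float, blur_circle_um: float) -> float:
    """Approximate half-width (+/-) of the image-side depth of focus, in um.

    Thin-lens approximation for a distant object: the image stays within a
    blur circle of diameter c over roughly +/- N * c around best focus.
    """
    return f_number * blur_circle_um


# Hypothetical designs; the blur circle is assumed equal to one pixel pitch.
designs = {
    "older: f/2.0, 4.2 um pixels": (2.0, 4.2),
    "newer: f/1.6, 2.1 um pixels": (1.6, 2.1),
}
for name, (n, pitch) in designs.items():
    print(f"{name}: +/-{depth_of_focus_um(n, pitch):.1f} um")
```

Halving the pixel pitch while opening the aperture shrinks the tolerance from about ±8.4 µm to about ±3.4 µm in this example. At that point, a z-shift of a few micrometres is a large fraction of the budget.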

The impact on image quality of a lens shift of ±2.5 µm in the z-direction can be expressed as a loss of object detection capability: in this case (Fig. 1), a drop from 38.5 m to 37 m compared with a drop from 52 m to 37 m. In other words, the improvement in camera design is lost due to misalignment between the lens and the imager.

As ADAS camera technology continues to advance, the margin for error in optical alignment diminishes. Even slight misalignments can significantly reduce image quality. Achieving and maintaining precise alignment in mass-produced camera systems requires high-performance processes and technology. This is particularly crucial in ADAS applications, where accurate and reliable image data is essential for real-time decision-making.
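The link between sensor size and tilt tolerance follows from simple geometry. If a flat imager is tilted by a small angle about its centre, each corner moves along z by the half-diagonal times the tangent of the tilt angle. The sketch below assumes a rigid, flat sensor and a hypothetical ±2 µm focus budget, and it ignores field curvature and lens tolerances. It computes the largest tilt that keeps the corners within that budget for two hypothetical sensor sizes. All values and function names are illustrative.

```python
import math

ARCMIN_PER_RAD = 60.0 * 180.0 / math.pi


def corner_defocus_um(tilt_arcmin: float, half_diagonal_mm: float) -> float:
    """z offset (um) at a sensor corner when a flat imager is tilted by a small
    angle about its centre."""
    return half_diagonal_mm * 1000.0 * math.tan(tilt_arcmin / ARCMIN_PER_RAD)


def max_tilt_arcmin(focus_budget_um: float, half_diagonal_mm: float) -> float:
    """Largest tilt (arcmin) that keeps the corners within +/- focus_budget_um."""
    return math.atan(focus_budget_um / (half_diagonal_mm * 1000.0)) * ARCMIN_PER_RAD


# Hypothetical example: the same +/-2 um focus budget on two sensor sizes.
for half_diag_mm in (3.0, 5.0):
    print(f"half-diagonal {half_diag_mm} mm: tilt must stay below "
          f"{max_tilt_arcmin(2.0, half_diag_mm):.2f} arcmin")
```

For the same focus budget, the larger sensor tolerates proportionally less tilt. This is why a narrowing through-focus curve tightens tilt alignment requirements most on large imagers.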

Addressing these challenges will require continuous innovation in camera design, manufacturing processes, and quality assurance protocols. By investing in these areas, ADAS manufacturers can ensure that their systems deliver the highest level of performance, safety, and reliability for drivers worldwide.

AUTOSENS USA 2024 AGENDA SESSION

Dirk Seebaum

Product Manager

TRIOPTICS

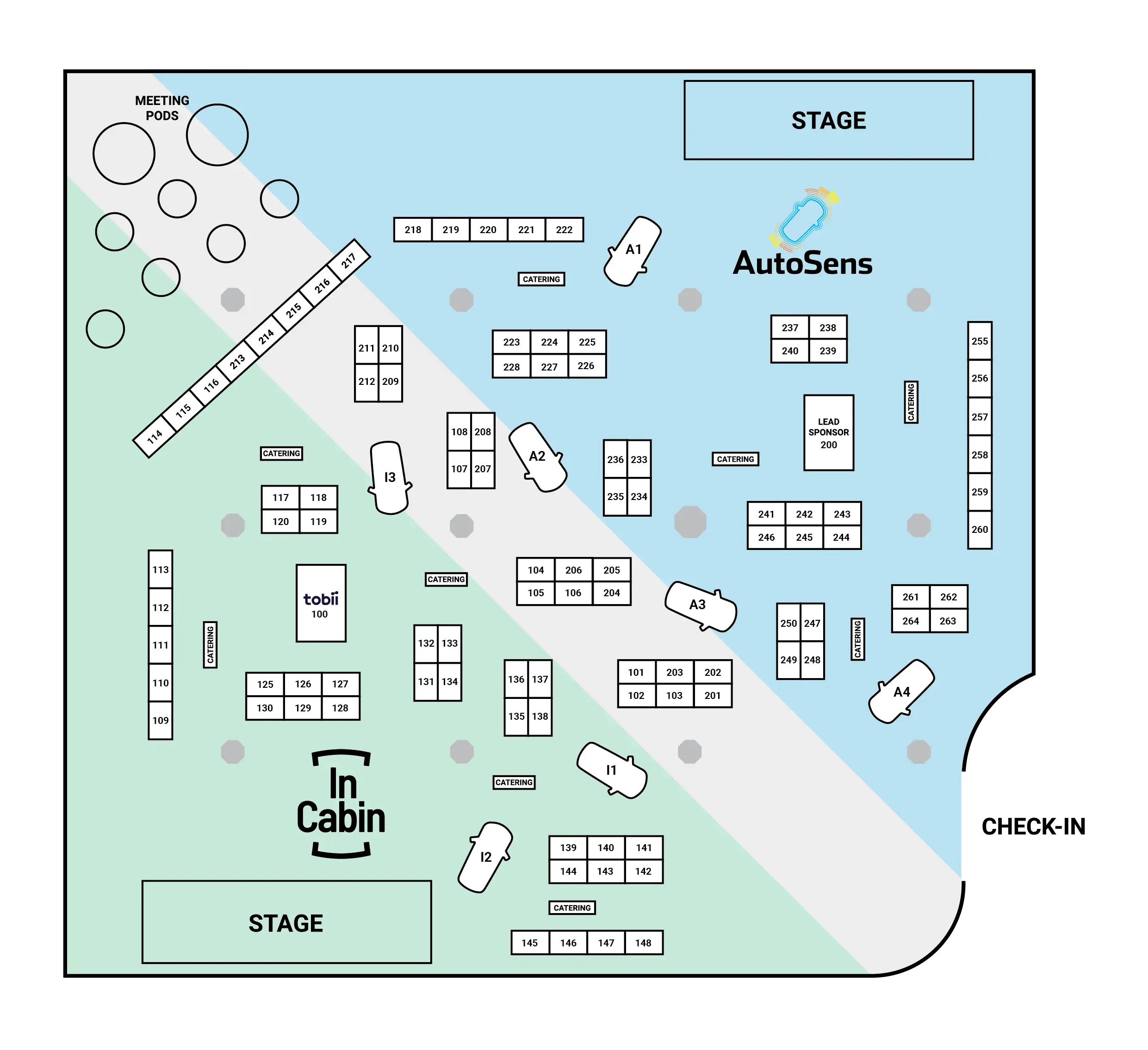

Connect on this topic in the exhibition

AutoSens and InCabin include a technology exhibition featuring technical demonstrations, vehicle demonstrations, and buck demos from world-leading companies. Engineers who come to AutoSens and InCabin have the opportunity not only to discuss the latest trends but also to get their hands on the tech and see it in action.

Ready to join the adventure and dive into the technical world of ADAS and AV technology? Our USA exhibition will feature all these companies and more at the cutting edge of this technology.