In this second edition of the CES takeaways from me (Sara Sargent) and Kayleigh Pearson, we cover innovations from Samsung, Smart Eye, STMicroelectronics, AIRY3D, Cipia, Garmin, and Sony + Siemens at CES. I'm kicking this blog off with a breakdown of how regulation meets Software Defined Vehicles. Then enjoy the company updates and, of course, tune in again for the next release!

Regulation meets Software Defined Vehicles

Software Defined Vehicles were so prevalent at CES that it hardly feels worth mentioning; it just feels natural. That said, I think there are a lot of confusing comments out there, so I address that confusion here.

This week I saw commentary on Tesla’s recall saying this is bad news for SDVs. I disagree, but unfortunately, until the industry agrees on new terminology, it will continue to be an issue. Here is why:

We are applying the term recall, which has an existing, very specific meaning in auto, to something with a small but impactful difference.

Basics:

- When we say Software Defined Vehicle, it means the car was designed from the beginning to receive software updates, known as OTAs (Over The Air, often used as shorthand for “over the air updates”), regularly throughout its entire life.

- People who develop OTAs do so because they know the vehicle is going to have things that need to be addressed with Firmware. Firmware is Software that changes the behavior of Hardware. To get Firmware onto your Hardware you can either connect a cable physically to the device, or you can send it over a cellular connection. That same process happens all the time, whether it is a planned update, a bug fix, or a new feature. This is what allows us to get new features in our SDV.

- With a traditional vehicle, when something went wrong with the backup camera, for instance, and there was a recall, you as a consumer would have to take your car in to the dealership and have it replaced or fixed. This was an interruption to your schedule, your transportation, and possibly your budget. The OEM has to figure out that a recall is needed, figure out how to fix it, and send whatever parts are required to all the dealerships. People also need to handle the scheduling and so on, so it takes weeks or months to get to all the fixes. With an SDV, for many things you can literally press a button and fix it with an OTA package while you’re asleep. When I hear recall, I think of the inconvenience to me as a consumer. But that does not apply to an OTA recall; it’s just a button press, although the thing that is being recalled could be an inconvenience in either case.

- Next is reliability. I am not an expert on this, but when an OEM did a traditional recall, I bet in most cases they knew the fix well, and once they started executing it they expected that it wouldn’t be a problem again. With OTA recalls, we’ve seen multiple instances in the last few months where companies put out a first OTA fix and then a few days later owners were having problems. On the other hand, OTA updates are a normal function: the car is designed so that it can fix issues with an OTA package, so the process itself is like scheduled maintenance, just like an oil change, whereas with a traditional recall the OEM will have needed to set up new processes, introducing opportunity for error. During a traditional recall not all owners will actually take the fix, whereas with SDVs the OEM can at least easily tell which vehicles don’t have the fix and act accordingly.

- Of course, safety. That part is the same: when I hear recall, I think unsafe. A disadvantage for those making SDVs is that they do not have the benefit of years of regulatory insight on upcoming requirements. For traditional vehicles, regulations are made a few years in advance of when the OEMs need to implement them, and regulators are experienced in regulating traditional vehicles. SDVs also need to follow those regulations, but because of the lack of regulation specific to SDVs, regulators are making rules in real time. One reason for the lack of regulation is that it is extremely challenging for regulators to be educated enough on the outcomes for their constituents. They don’t know all the technical terms, how SW behaves on the road, or how the sensors work. I believe they are working on understanding that, but you can see why it would be irresponsible for them to make laws they don’t quite understand. That’s why it is important that people with this knowledge spend time with their regulators to teach them what they need to know to make these rules. Once regulators decide that something in an SDV is unsafe, they can issue a recall. So in this case it’s not always that something is working unexpectedly; it could be that the regulators are just catching up to the technical capabilities and are doing their best to keep our roads safe in real time. Finally, safety shouldn’t just be up to regulation; it should come from good engineering and, even better, responsible business leadership from OEMs, Tier 1s, and the full supply chain.

To summarize: when an OTA SW/FW package is sent due to regulation or something NHTSA deems unsafe, they call the OTA a ‘recall’; when it’s sent for maintenance, they call it an ‘update’.
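For readers who think in code, the naming distinction above can be sketched in a few lines. This is only an illustration of the terminology, not how any OEM or regulator actually models it: the `Reason` categories, the `OtaPackage` type, and both helper functions are hypothetical names made up for this example, and the "missing fix" check is a plain string comparison on a made-up version field.

```python
from dataclasses import dataclass
from enum import Enum


class Reason(Enum):
    # Hypothetical categories, chosen only to mirror the text above.
    MAINTENANCE = "maintenance"      # planned update, bug fix, new feature
    SAFETY_DEFECT = "safety_defect"  # something the regulator deems unsafe
    REGULATION = "regulation"        # needed to comply with a rule


@dataclass(frozen=True)
class OtaPackage:
    version: str
    reason: Reason


def label(package: OtaPackage) -> str:
    """Same delivery mechanism either way; only the name changes."""
    if package.reason in (Reason.SAFETY_DEFECT, Reason.REGULATION):
        return "recall"
    return "update"


def vehicles_missing_fix(fleet: dict[str, str], fixed_version: str) -> list[str]:
    """Return IDs of vehicles whose installed version is not the fixed one."""
    return sorted(vid for vid, installed in fleet.items() if installed != fixed_version)


# Example with made-up vehicles and versions.
fleet = {"car-a": "2.1", "car-b": "2.0", "car-c": "2.1"}
print(label(OtaPackage("2.1", Reason.SAFETY_DEFECT)))  # recall
print(label(OtaPackage("2.2", Reason.MAINTENANCE)))    # update
print(vehicles_missing_fix(fleet, "2.1"))              # ['car-b']
```

The second helper reflects the reliability point from the list: with a connected fleet, finding the vehicles that still lack a fix is a lookup rather than a mailing campaign.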

The headlines are making it sound like the recalls are something different than what they are, and people don’t understand it’s a software update. I hope this helps you read between the lines on what is sensational, and what is worth educating yourself about.

Now, more about the companies who are enabling amazing roadway experiences and what we learned at CES!

Garmin

Garmin demonstrated their in-cabin user interface layer capabilities, which use a predictive engine to enable convenience. For instance, if you usually leave your bag on the seat when you drive to work in the morning and it detects you are stressed, it can ask you if you’re missing your bag. Their Qualcomm-enabled Unified Cabin runs on one instance of Android, and with the Garmin Cabin App one user can control all screens in the vehicle, including screen sharing. As a mom, Kayleigh said this would be really useful for long car trips out of town. They can detect “zones” in the cabin for things like the gaming controller, so that the screen showing the game can move with the controller.

Garmin is using UWB in the car, as most devices like controllers and smartphones already have UWB connectivity capabilities. They even provide device tags so you don’t need to fuss with the device settings yourself. The car of course has Wi-Fi so you can cast if you wish, and the demo had Dolby Atmos sound which, when paired with the screen in the rear seat, felt like being in the movie theater. The experience was all-encompassing and a long way from the mini TV my family had for a short time in our conversion van.

They also had themes you could select to make your screen more personalized. We chose Tie Fighter of course which was super cool, highly recommend. Their system is safe for the Android 14 update. We really enjoyed this demo.

Cipia

“I was particularly impressed with the performance of Cipia’s DMS software at CES 2024. The stability of the core signals was substantially above what was expected, especially considering I was testing wearing a baseball cap, KN95 face mask and polarized sunglasses. That is one of the toughest edge cases of all, so this is really excellent work by the engineering team.” – Colin Barnden, Semicast Research

High praise from Colin, and I am especially impressed that they were able to detect with all of those facial obstructions. Cipia had a car at their stand for CES where they demonstrated their occupancy monitoring software, Cipia Cabin Sense, which is expected to hit the roads during 2024. The demo used a wide FOV camera to capture both driver and passengers, showing which seats were occupied, performing facial recognition throughout the cabin, and detecting body positions.

Cipia also showed their Driver Sense DMS and their Cipia-FS10 video telematics and driver monitoring device for fleets.

Personally, as someone obsessed with the in-cabin industry, it was really fun to see the big successes for Cipia over the last year: they added 4 new OEM customers and more than doubled the number of models. In total, Cipia Driver Sense has been selected by 9 OEMs for 61 models in the US, EU and China, and is in serial production. I think it shows great progress of the tech throughout the industry. They also have an engaging team that is ready and happy to answer any and all questions. We look forward to seeing more from Cipia at InCabin in Detroit and Barcelona this year.

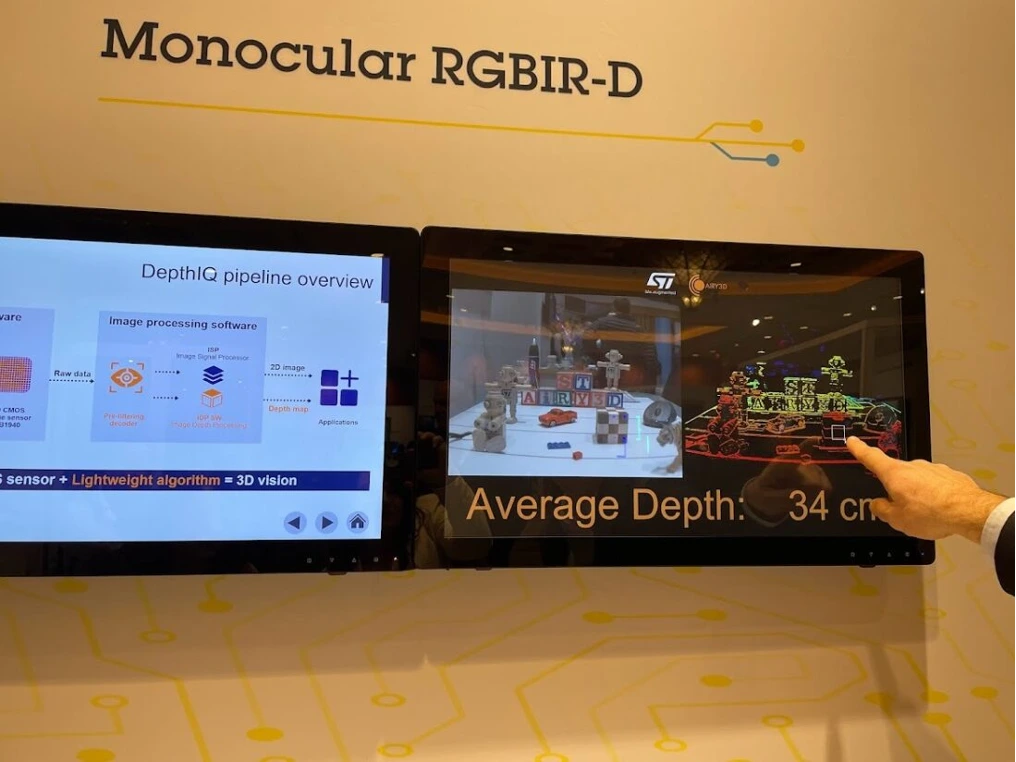

Cyrille Trouilleau and Chris Varlamos at STMicroelectronics showed us several demos in their suite that demonstrated their capability to detect all types of objects and movement in real time and in 3D space.

There’s something especially charming about this CES for STMicro. Cyrille explained that he had seen the show at the Sphere, and that it was a must-visit experience. If I understand it correctly, it is STMicro that supplies the image sensor for the cameras that film the footage used to create the experiences in the Sphere.

Cyrille introduced their solution, a monocular RGBIR-D sensor called “DepthIQ”. It is targeted at meeting expected EuroNCAP requirements for 3D pose detection for safe deployment of airbags.

Developed with collaborator AIRY3D, the system uses a Transmissive Diffraction Mask (TDM) and a lightweight algorithm that provides the depth map. This is the first non-stereo option from STMicroelectronics, which of course saves on cost and makes for easier integration.

They are releasing the VL53L8 DToF (direct time-of-flight) sensor to detect things like human movement and air quality issues like smoke. They showed us multi-zone, time-of-flight-based presence detection. In this demo the screen showed several zones in which we could be detected. Kayleigh and I both got in the detection area and were detected in real time in different zones as we moved around.
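To give a feel for what multi-zone presence detection involves, here is a minimal sketch. It is not ST's algorithm or API: the function name, the grid layout, and the 150 mm margin are all assumptions for illustration. The idea is simply to compare each zone's measured distance against an empty-scene baseline and flag the zones where something is noticeably closer.

```python
def occupied_zones(baseline_mm, reading_mm, margin_mm=150):
    """Return (row, col) for each zone where the reading is closer than
    the empty-scene baseline by more than margin_mm (hypothetical values)."""
    hits = []
    for r, (base_row, read_row) in enumerate(zip(baseline_mm, reading_mm)):
        for c, (base, dist) in enumerate(zip(base_row, read_row)):
            if base - dist > margin_mm:
                hits.append((r, c))
    return hits


# Made-up 2x3 grid of distances in millimetres.
baseline = [[2000, 2000, 2000],
            [2000, 2000, 2000]]
reading = [[2000, 900, 2000],
           [1950, 2000, 1200]]
print(occupied_zones(baseline, reading))  # [(0, 1), (1, 2)]
```

Two people standing in different parts of the field of view show up as two separate flagged zones, which matches what we saw on screen as we moved around.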

STMicroelectronics also takes my prize for most hidden suite. I won’t give the location away, but it took us a very long time and a few mazes to find them. As expected, it proved to be a treasure well discovered.

I met with Jean-Sebastian from AIRY3D, who explained that they have been around for about 8 years, are located in Montreal, and have investments from Bosch Venture Capital and Intel Capital. They are providing monocular 3D vision using standard CMOS image sensors.

He described the three main ways of performing 3D detection as stereo vision, time of flight, and structured light.

AIRY3D DepthIQ uses a Transmissive Diffraction Mask (TDM), which is a coating on the image sensor itself that encodes depth information from light. They also provide the lightweight 3D data extraction algorithm. They partnered with STMicroelectronics on ST’s existing automotive 5MP image sensor, which you would have seen at AutoSens and InCabin in the past. Now, with the AIRY3D solution, the 2D imager can provide 3D data, and these upgraded image sensors can be a simple drop-in replacement if you’re already using the image sensor.

Having a single image sensor offers a lot of benefits, like savings on cost, space, and heat dissipation, and it is simpler from a compute perspective as well. With this solution, machine vision applications can be augmented to include 3D information, meaning the system has higher confidence and lower error rates. The demo was really impressive, working very quickly, in real time, and with great accuracy.

Smart Eye

“Smart Eye’s booth at CES provided a hands-on insight into how Driver Monitoring Systems can enhance a vehicle’s capabilities.

While taking a simulated drive, the software would instantaneously detect if the driver’s focus strayed from the road, highlighting the crucial safety benefits of the sensors and in-car cameras it was equipped with. In addition, when leaving the mock car, it was great to see how efficiently the system would notify you if you left behind an item such as a backpack or phone, or more importantly an infant!

I also thoroughly enjoyed seeing how these systems can be used to provide a more personalised experience for the consumer. For example, the technology could detect with surprising accuracy how much I liked or disliked a song played over the car’s speakers based on subtle facial expressions. Meanwhile, if desired, the system could follow my gaze and provide me with useful information on bulletins and adverts that I saw along the route, such as supplying directions.

With the new General Safety Regulations mandating the use of DMS in new vehicles, the potential of this technology is a hot topic that I hear and write about often as a journalist in the field. Seeing it in action at the Smart Eye booth at CES was therefore a valuable experience that helped bring its potential to life as technology that is active and effective, rather than merely a futuristic concept.”

– Tiana May, Future Transport-News

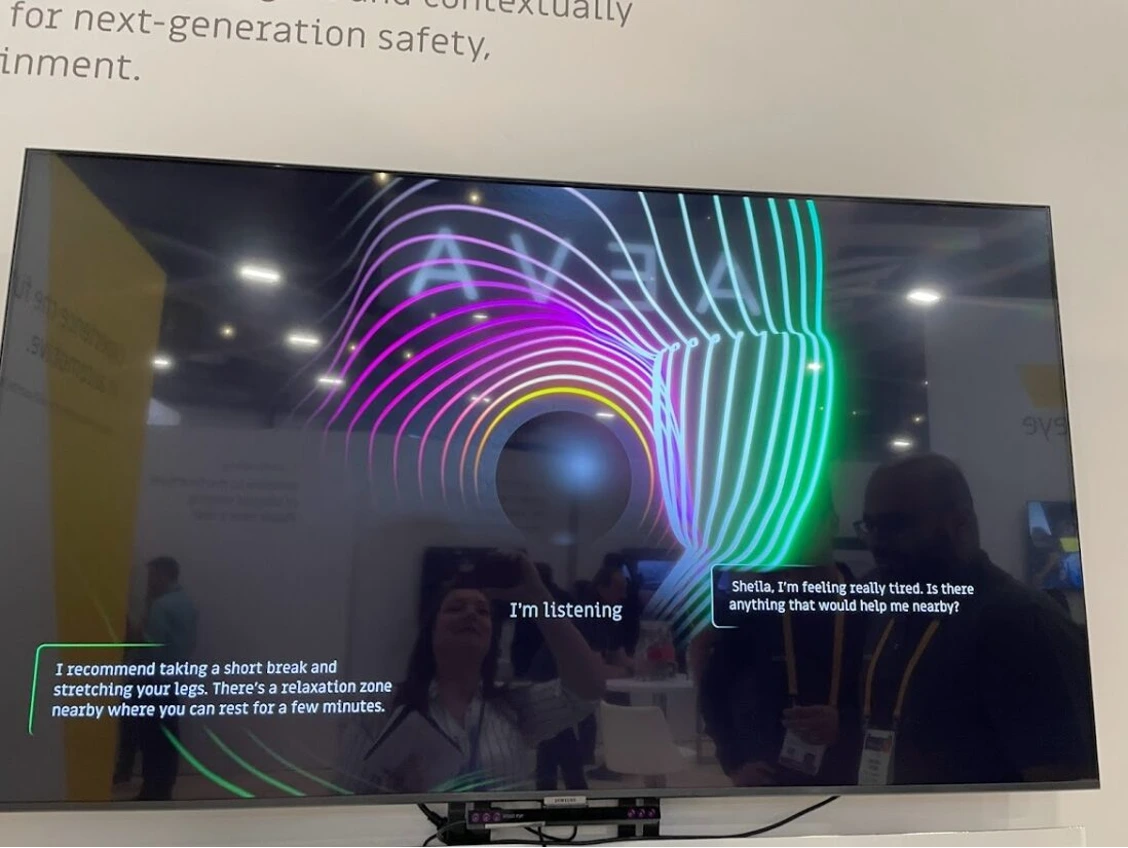

The Smart Eye LLM demo was excellent at getting me to engage with real-life applications where I could realistically see myself using this. And I have to add that the enthusiasm of the Smart Eye team is infectious. They are to the point, happy, clear and proud of the systems they deliver. During the LLM demo I chatted a bunch with their system to see what I could get it to do for me. It was a great time and did a good job of keeping me entertained. Part of the demo was explaining that the LLM could be used to have a conversation with you to help keep you awake if you were getting drowsy. They explained that talking is a known factor in overcoming drowsiness. The LLM can also take in your emotional state, as used by the DMS, and know if you are stressed, disgusted, happy, etc., and then use that to set the right environment for the safest driving scenario with changes to light, temperature, audio, and now conversation or suggestions. It could even tell the passenger to wake you up, or remind you to stay focused (“child in vehicle”) if you are texting and driving or being otherwise irresponsible.

Smart Eye has been using their own synthetic data tool, which is deep learning based, can be positioned in various locations, and can account for different angles of the sun, among many other parameters. This tool is available to OEM partners who work with Smart Eye so that they can use it to measure the performance of their tracker; it is not to be used for training. Of course, their tool has the advantage that many associate with simulation tools, which is saving time and cost.

Smart Eye also had a buck demo where they featured various car modes for in-cabin. Modes included keywords like family (switch view to kids in the rear seats), business call (answer and hang up with gaze), entertainment (gesture control for movies), safety (can send alert if someone passes out).

There were very well-thought-out next-stage applications at the Smart Eye booth at CES this year. Kayleigh didn’t get to do this one with me, so she is really looking forward to their demo in Detroit at InCabin!

Samsung

We were greeted so warmly at the Samsung suite, which was the 2nd most hidden of all the suites I visited, by the way. It was a wonderful way to kick off our CES private tours. Thanks so much to the Samsung team for the wonderful greeting. Samsung showed lots of their new technology for a variety of industries.

They showed the Exynos Auto V920, headlined as their premium processor for intelligent and pleasant driving, which uses Arm Cortex-A78AE cores with concurrent processing available and supports up to 6 displays and 12 cameras. Their hypervisor runs 2 OSs: Linux for AI and ADAS, and Android for gaming and 4K video. They showed a Highway Driving Assistant where the inferencing is done on the chip.

Of course we really liked the demo of their DMS camera and their in-cabin IR sensor with in-house drowsiness detection, eye gaze tracking, yawn detection, all done in real time on the chip.

They were very excited to share that they are opening a new fab in Taylor, TX, spending $17B.

We really enjoyed the event-based monitoring demo where there was a little skier going around a mountain and the visualizer showed that the system zoomed in on the moving object.

They also shared a memory solution that boasted being a low power, high performance automotive solution with capacity up to 24GB, targeting ADAS and infotainment.

Samsung certainly showed up to CES with a very diverse and full portfolio of solutions for automotive. There was so much to see that this barely covers all of the technology we saw and experienced at Samsung’s suite. Keep an eye on them!

Sony + Siemens (AR 3D CAD/Simulated engineering workshop)

We really enjoyed the visit to the Siemens booth, where Ben Widdowson and David Taylor told us about their Immersive Design Environment. Siemens has partnered with Sony on this AR system that enables engineers to develop their 3D designs in 3D space. The system includes a headset with eye tracking and 3D display, as well as a sensor you wear on your hand to manipulate the design.

This system is used by the Red Bull Racing & Red Bull Technology team for their steering wheel. The benefit for the Red Bull Racing team is time. They have a week to make changes, and it’s not possible to physically build changes in that short a time. Simulation, and this system especially, is designed to allow the stakeholders to get as close to the feel of the outcome as possible.

The system was inspired by Tony Stark, and there’s a ton more to know about it, so check it out.

Now who wants to see this at AutoSens and InCabin this year??

In conclusion…

Last year CES showed us that in-cabin was indeed at the front of companies’ minds, as most T1s had an in-cabin display.

This year I’ve seen that companies who want to show what they offer for in-cabin think the experience at their booth is really impactful. I found that many companies had something for us to get into, sit in, and feel something. At Garmin it was the audio experience and the capability for my Mum (KP) in the front seat to control my screen in real time. Socionext, Cipia, Smart Eye, and Mitsubishi demonstrated their DMS, OMS, and CPD with various modalities.

At Forvia it was about the peaceful, calm experience of sitting in their sustainable chair, hearing the audio coming from the headrest and feeling the haptic feedback (gentle vibration) of the chair matching the audio experience. I could go on and on. I think this is an excellent sign for what to look forward to at InCabin & AutoSens this year.

Ready to meet companies at the cutting-edge of ADAS and AV technology? Join us at AutoSens in Detroit 21-23rd May, get your pass here.

Is InCabin more relevant to you? Get your USA pass here.