A closer look at the making of the EyeSight Driver Assist Platform by Subaru

By Joseph Notaro, VP, WW Automotive Strategy and Business Development, ON Semiconductor

It is probably no secret that the automotive market is extremely demanding and competitive. To justify the cost of introducing new features, a manufacturer needs to be confident that consumers are willing to pay for them. The continued development of advanced driver assistance systems (ADAS) is a good example of this, because ADAS addresses two of the key features that buyers value: convenience and safety.

ADAS is increasingly recognized as a way to improve safety. The EyeSight® system developed by Subaru® has earned independent recognition from industry experts and vehicle owners for the way it integrates with other vehicle systems to deliver both convenience and safety. The system uses stereoscopic machine vision to identify road conditions, traffic signals and potential hazards, and uses this information to control the vehicle’s speed and to alert the driver when they need to take action.

What is particularly interesting about the EyeSight system is how closely Subaru and ON Semiconductor® worked together to develop the solution. This is unusual in a sector with such a rigidly tiered, vertical supply chain. Normally, an automaker works predominantly with Tier 1 suppliers, which in turn work with Tier 2 suppliers, and so on. ON Semiconductor supplies Tier 1 and Tier 2 manufacturers.

In this case, ON Semiconductor had direct technical engagement with the automaker, because Subaru itself was closely involved in algorithm development and sensor selection to optimize overall system performance against its vehicle-level requirements. The system was architected as an integral part of the vehicle’s capabilities rather than added on afterward to enhance them.

The EyeSight system employs two AR0231AT CMOS image sensors, which form the forward-facing ‘eyes’ of the ADAS, looking at the road ahead. ON Semiconductor also supplies the image sensor used in the previous generation of the EyeSight system, the AR0132AT. To date, ON Semiconductor has shipped more than 120 million image sensors for camera-based ADAS.

The benefits of close collaboration

Improving the performance of an ADAS such as EyeSight has to be addressed at every point in the signal chain, from image capture to data analysis. A classic problem is that poor-quality input data leads to poor algorithm performance, often summarized as “garbage in, garbage out”. To avoid it, Subaru focused on choosing a high-performing sensor so that high-quality data would feed its high-performance algorithms, engineering a “performance in, performance out” architecture from the ground up.

Data, in the classic sense, is a stream of binary digits, but that stream has to originate somewhere. In ADAS, the point of origin is the sensors that monitor the real world, both inside and outside the vehicle, so the quality of the sensor directly dictates the quality of the data. The AR0231AT has an impressive data sheet by any manufacturer’s standards. But an OEM like Subaru, which is used to working with Tier 1s that supply complete systems, isn’t really interested in the figures on an image sensor’s data sheet, however impressive they may be. What it is interested in, understandably, is the quality of the data and how that data can help it achieve the vehicle system performance it requires.

Understanding the application domain

To fully understand the customer’s needs, ON Semiconductor engineers had to become more familiar with the end application. Because the two engineering teams were working so closely, both sides fully supported this knowledge exchange.

This involved taking a vehicle-level requirement and translating it into sensor performance. That may sound simple, but it requires a deep understanding of the issues from both points of view, along with a common language that bridges the two domains.

For example, Subaru needed the system to be able to read traffic signals in all lighting conditions. Before the advent of LED technology, streetlights would typically be powered directly from an AC source at a line frequency of 50 Hz or 60 Hz, while traditional vehicle lights were driven from 12 V DC and so stayed in a steady state. LED technology has changed all of that: LEDs are now used in both street lighting and vehicles, and they are often driven by a high-frequency pulse-width-modulated (PWM) square wave, which means they rapidly switch on and off. There is no common standard for the frequency used; in fact, the frequency and duty cycle depend on many factors, including the required light output. Because that output can change with environmental conditions, the way the LEDs flicker can vary drastically.

Because CMOS image sensors capture frames at high rates, each using a short exposure window, there is an increased probability that an LED will be off for the entire exposure, particularly when many LEDs are switching on and off. If too many LEDs are off at capture, the image may show the light as off, or the system may fail to recognize the signal. To address the customer’s requirements, it was necessary to understand the relationship between LED flicker and the quality of the data fed to the ADAS. More importantly, ON Semiconductor engineers needed a way to mitigate the impact of LED flicker within the sensor, to the point that it was no longer a limiting factor in the system’s overall performance.
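The relationship between exposure time and LED flicker can be illustrated with a simple timing model. The sketch below is illustrative only: it assumes an idealized LED driven by a PWM square wave with frequency `pwm_hz` and duty cycle `duty`, and a single global exposure window of length `exposure_s` that starts at an arbitrary phase of the PWM cycle. Rolling shutters, multi-exposure HDR and the AR0231AT’s own LFM scheme are not modeled, and all function names are hypothetical.

```python
"""Idealized timing model of LED flicker vs. camera exposure (illustrative only)."""


def exposure_sees_led(start_s: float, exposure_s: float, pwm_hz: float, duty: float) -> bool:
    """Return True if the exposure window [start_s, start_s + exposure_s)
    overlaps at least one LED on-interval.

    Assumed LED model (hypothetical): on during [k*T, k*T + duty*T) for every
    integer k, where T = 1 / pwm_hz, and off otherwise.
    """
    period = 1.0 / pwm_hz
    on_time = duty * period
    phase = start_s % period          # position of exposure start within one PWM cycle
    if phase < on_time:               # exposure opens while the LED is already on
        return True
    # Otherwise the LED next turns on after (period - phase) seconds.
    return exposure_s > period - phase


def miss_probability(exposure_s: float, pwm_hz: float, duty: float, samples: int = 10_000) -> float:
    """Fraction of evenly spaced start phases for which the LED appears fully off."""
    period = 1.0 / pwm_hz
    misses = sum(
        not exposure_sees_led(i * period / samples, exposure_s, pwm_hz, duty)
        for i in range(samples)
    )
    return misses / samples


def miss_probability_closed_form(exposure_s: float, pwm_hz: float, duty: float) -> float:
    """Same quantity analytically: max(0, 1 - duty - exposure_s * pwm_hz)."""
    return max(0.0, 1.0 - duty - exposure_s * pwm_hz)


if __name__ == "__main__":
    # Hypothetical LED: 100 Hz PWM at 20 % duty cycle (10 ms period, 2 ms on-time).
    for exposure_ms in (0.1, 1.0, 4.0, 8.0):
        exposure_s = exposure_ms / 1000.0
        sim = miss_probability(exposure_s, 100.0, 0.2)
        exact = miss_probability_closed_form(exposure_s, 100.0, 0.2)
        print(f"exposure {exposure_ms:4.1f} ms -> LED missed in {sim:.2%} of frames "
              f"(closed form {exact:.2%})")
```

Under these assumptions, a short exposure such as one used in bright daylight can miss the LED entirely in a large share of frames, while an exposure at least as long as the LED’s off-time (8 ms in this example) never misses it. Simply lengthening the exposure is not always an option, because bright scenes can then saturate, which is one reason flicker mitigation is built into the sensor itself; how the AR0231AT actually implements LFM is not described here.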

LED flicker mitigation (LFM) is a feature of ON Semiconductor’s CMOS image sensors qualified for automotive use. Even so, the engineering team had to modify and improve the flicker mitigation in the AR0231AT to meet the customer’s definition of acceptable. In fact, ON Semiconductor engineers went beyond acceptable, delivering a sensor that surpassed even the customer’s expectations.

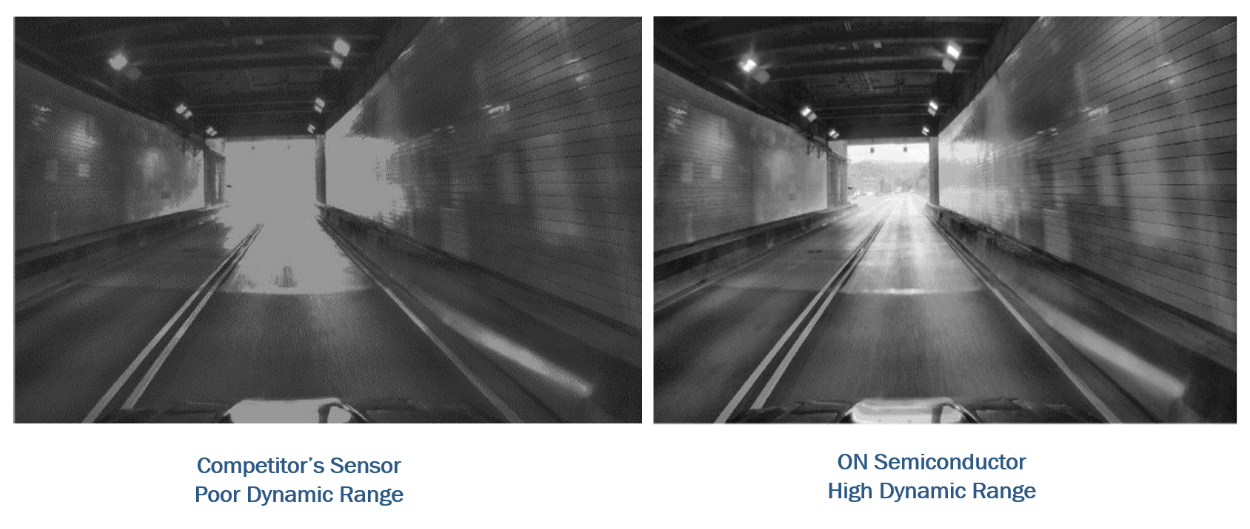

Other examples of how the sensor was modified to meet the customer’s requirements include improving the dynamic range and increasing the field of view. Again, these were not direct requests from the customer; they were the indirect solutions that ON Semiconductor engineers needed to implement to meet the customer’s requirements.

This case study makes it clear that working through a Tier 1 and possibly a Tier 2 or even Tier 3 supplier would have made it extremely difficult for any semiconductor manufacturer to interpret the end customer’s requirements accurately enough to effect the changes needed.

This is where the benefits of ON Semiconductor’s philosophy, ‘you bring the talent, we’ll bring the tools’, really become apparent. By working closely with Subaru, ON Semiconductor was able to deliver a product that surpassed expectations. This is just one example of how ON Semiconductor now engages with customers at every level. Moving forward, the expectation is for even closer collaboration with OEMs at the top of their vertical market, in a close three-way partnership with system integrators, because that is how real technological breakthroughs are made.